Medical Engineering and Electrochemistry 1. Electromagnetic Targeting to Tumors

- Authors

- 根岸 直樹 野崎 幹弘 岡野 光夫

- Publisher

- The Electrochemical Society of Japan

- Journal

- Denki Kagaku oyobi Kogyo Butsuri Kagaku (ISSN:03669297)

- Volume, issue, pages, publication date

- vol.62, no.5, pp.370-375, 1994-05-05 (Released:2019-09-15)

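- Authors

- 根岸 直樹 相吉 英太郎 羽深 嘉宣

- Publisher

- The Society of Instrument and Control Engineers

- Journal

- Transactions of the Society of Instrument and Control Engineers (ISSN:04534654)

- Volume, issue, pages, publication date

- vol.29, no.10, pp.1203-1212, 1993-10-31 (Released:2009-03-27)

- Number of references

- 9

- Times cited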

- 2

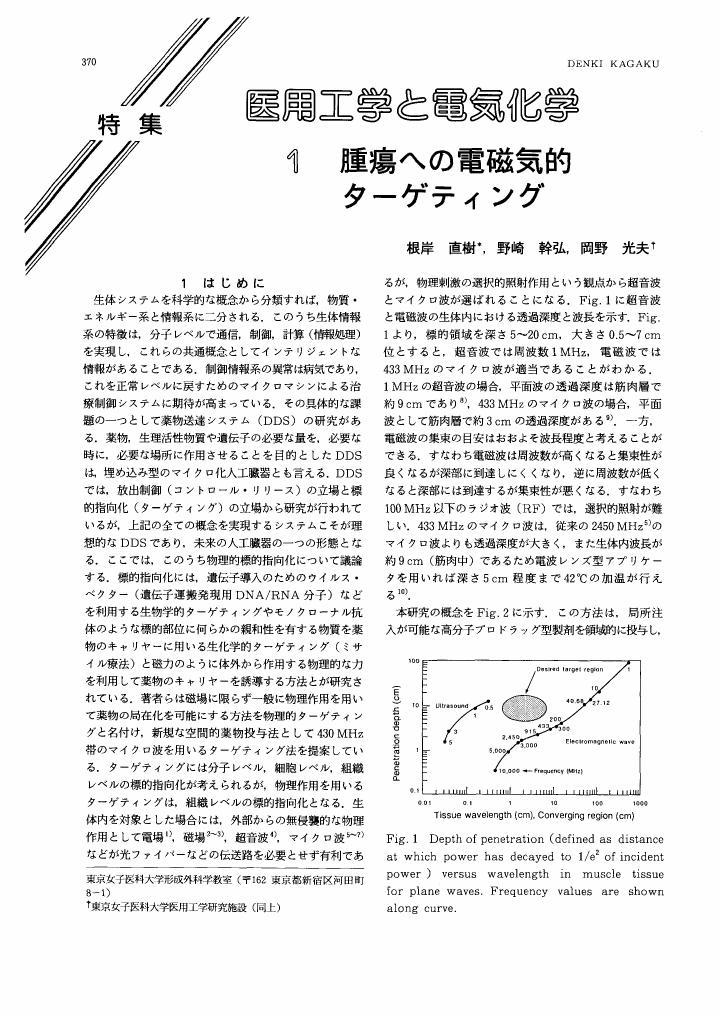

Many learning algorithms exist for finding the synaptic weights of a layered neural network that minimize the output errors for multiple training data. The best known are the backpropagation procedures, which in practice minimize the multiple error functions in one of two ways. One minimizes the total sum of all error functions; the other reduces, at each iteration, only a single error function, chosen in turn for that iteration in cyclic order. The former does not always reduce the multiple output errors in a balanced and efficient way, and the latter does not guarantee convergence from the standpoint of numerical analysis. In this paper, we therefore adopt the learning manner of minimizing the maximum of all the error functions, so that the output errors for all training data are reduced uniformly, and we propose a learning algorithm based on a nondifferentiable optimization method that uses the generalized gradient in order to guarantee convergence. Simulation results for a simple recognition problem with multiple training patterns show the efficiency of the proposed learning manner and algorithm.
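The three learning manners contrasted in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' algorithm: a single linear unit stands in for the layered network, the toy OR data set and every function name (`sum_step`, `cyclic_step`, `minimax_subgradient`, etc.) are hypothetical, and the minimax manner, minimizing E_max(w) = max_p E_p(w), is approximated by a basic subgradient method with a diminishing step size. At each step it moves along the gradient of one active (maximal) error function, which is one element of the generalized gradient of the max.

```python
import math

# Hypothetical toy data (not from the paper): the OR function with a
# constant bias input of 1.0. Each pattern is (x, t) with x = (bias, x1, x2).
PATTERNS = [
    ((1.0, 0.0, 0.0), 0.0),
    ((1.0, 0.0, 1.0), 1.0),
    ((1.0, 1.0, 0.0), 1.0),
    ((1.0, 1.0, 1.0), 1.0),
]


def output(w, x):
    """Output of a single linear unit (a stand-in for a layered network)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def error(w, pattern):
    """E_p(w) = 1/2 (y_p - t_p)^2 for one training pattern."""
    x, t = pattern
    return 0.5 * (output(w, x) - t) ** 2


def error_grad(w, pattern):
    """Gradient of E_p with respect to w: (y_p - t_p) * x_p."""
    x, t = pattern
    r = output(w, x) - t
    return [r * xi for xi in x]


def sum_error(w, patterns):
    """Manner 1 objective: the total sum of all error functions."""
    return sum(error(w, p) for p in patterns)


def max_error(w, patterns):
    """Minimax objective: the largest of the error functions."""
    return max(error(w, p) for p in patterns)


def sum_step(w, patterns, eta):
    """Manner 1: one gradient step on the summed error."""
    total = [0.0] * len(w)
    for p in patterns:
        total = [ti + gi for ti, gi in zip(total, error_grad(w, p))]
    return [wi - eta * ti for wi, ti in zip(w, total)]


def cyclic_step(w, patterns, k, eta):
    """Manner 2: at iteration k, reduce only error function k mod P."""
    g = error_grad(w, patterns[k % len(patterns)])
    return [wi - eta * gi for wi, gi in zip(w, g)]


def minimax_subgradient(w, patterns, iters=2000, eta0=0.5):
    """Minimize max_p E_p(w) with a basic subgradient method.

    The gradient of one active (maximal) error function is used at each
    step; it belongs to the generalized gradient of the max function.
    The max objective need not decrease monotonically, so the best
    iterate seen so far is returned together with its objective value.
    """
    best_w, best_f = list(w), max_error(w, patterns)
    for k in range(iters):
        active = max(patterns, key=lambda p: error(w, p))
        g = error_grad(w, active)
        eta = eta0 / math.sqrt(k + 1)  # diminishing step size
        w = [wi - eta * gi for wi, gi in zip(w, g)]
        f = max_error(w, patterns)
        if f < best_f:
            best_w, best_f = list(w), f
    return best_w, best_f
```

For example, `minimax_subgradient([0.0, 0.0, 0.0], PATTERNS)` starts from a worst-case error of 0.5 (three of the four patterns have residual -1) and returns the best weights found along with their worst-case error. Tracking the best iterate is a deliberate choice: unlike a gradient step on the summed error, a subgradient step on the max can temporarily increase the objective.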