Recognition of Human Gender in Dogs

- Authors

- 高岡 祥子 森崎 礼子 藤田 和生

- Publisher

- The Japanese Psychological Association (public interest incorporated association)

- Journal

- Proceedings of the Annual Convention of the Japanese Psychological Association, 72nd Annual Convention (ISSN:24337609)

- Volume, issue, pages, and publication date

- pp.2AM113, 2008-09-19 (Released:2018-09-29)

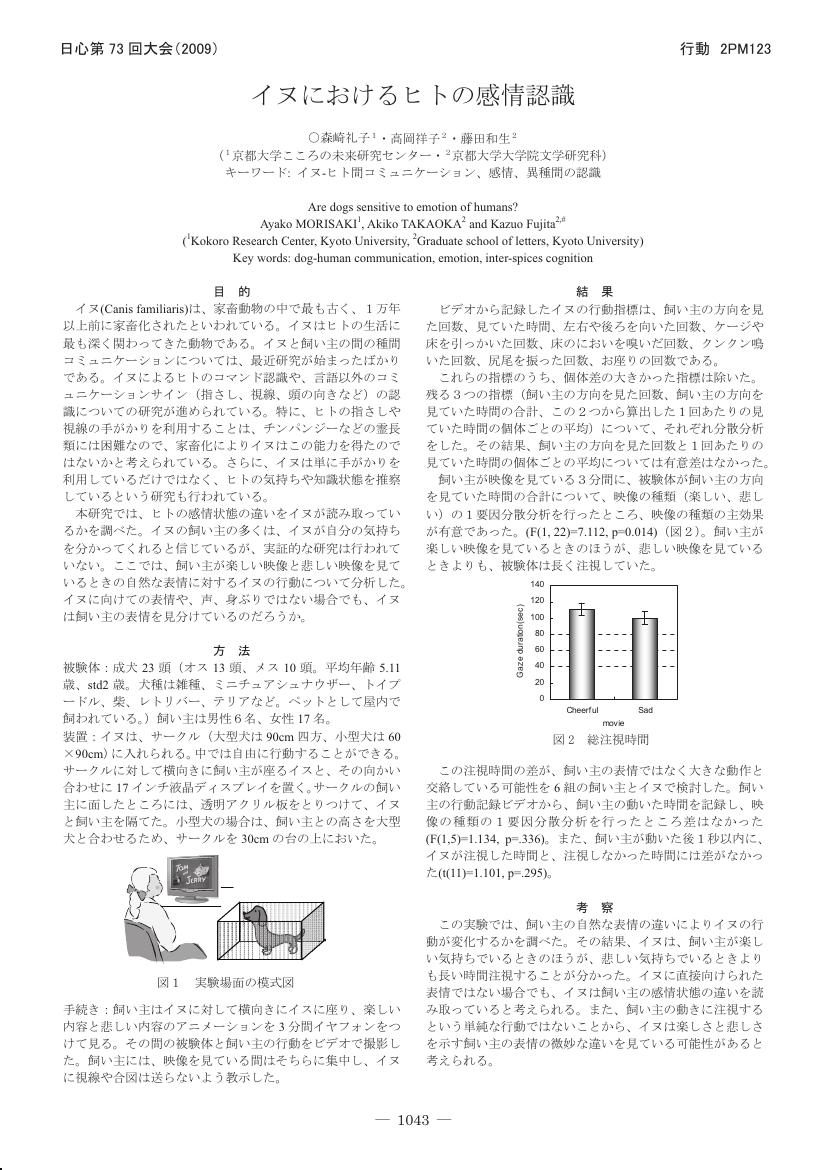

Recognition of Human Emotions in Dogs

- Authors

- 森崎 礼子 高岡 祥子 藤田 和生

- Publisher

- The Japanese Psychological Association (public interest incorporated association)

- Journal

- Proceedings of the Annual Convention of the Japanese Psychological Association, 73rd Annual Convention (ISSN:24337609)

- Volume, issue, pages, and publication date

- pp.2PM123, 2009-08-26 (Released:2018-11-02)

Sensory-Integrative (Cross-Modal) Concept of Human Gender in Dogs (Canis familiaris)

- Authors

- 高岡 祥子 森崎 礼子 藤田 和生

- Publisher

- The Japanese Society for Animal Psychology

- Journal

- The Japanese Journal of Animal Psychology (ISSN:09168419)

- Volume, issue, pages, and publication date

- vol.63, no.2, pp.123-130, 2013 (Released:2013-12-17)

- Number of references

- 27

- Number of citing works

- 3

Previous studies suggest that nonhuman animals form concepts that integrate information from multiple sensory modalities, such as vision and audition. For instance, Adachi, Kuwahata, and Fujita (2007) demonstrated that dogs form an auditory-visual cross-modal representation of their owner. However, whether such multimodal concepts extend to more abstract, collective categories remains unknown. To address this question, we tested whether dogs were sensitive to the congruence between the human gender suggested by an unfamiliar person's voice and that suggested by a face. After playing a voice, we showed the dogs a photograph of a male or female human face on a monitor; the gender of the voice either matched or mismatched the gender of the face. Dogs looked at the photograph longer when the voice and face were incongruent in gender than when they were congruent, suggesting a violation of expectancy. This result implies that dogs spontaneously associate auditory and visual information to form a cross-modal concept of human gender. This is the first report showing that cross-modal representation in nonhuman animals extends to an abstract social category.