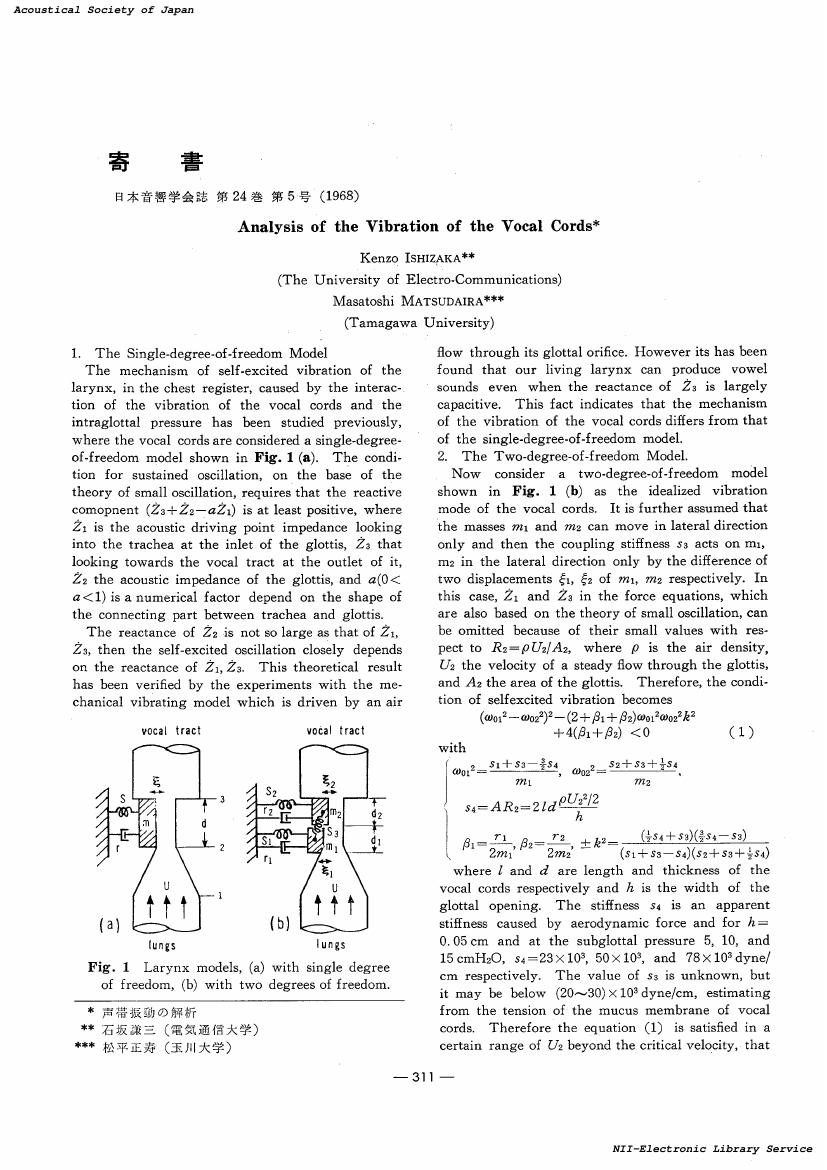

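OA Analysis of Vocal Cord Vibration (声帯振動の解析)

- Authors

- 石坂 謙三, 松平 正寿

- Publisher

- Acoustical Society of Japan

- Journal

- 日本音響学会誌 (Journal of the Acoustical Society of Japan) (ISSN:03694232)

- Volume, issue, pages, and publication date

- vol.24, no.5, pp.311-312, 1968-09-30 (Released:2017-06-02)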

- Authors

- Shigeaki Amano, Hideki Kawahara, Hideki Banno, Katuhiro Maki, Kimiko Yamakawa

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.43, no.2, pp.105-112, 2022-03-01 (Released:2022-03-01)

- Number of references

- 30

A perception experiment and analyses were conducted to clarify the acoustic features of pop-out voice. Speech items pronounced by 779 native Japanese speakers were prepared as stimuli by mixing them with a babble noise that consisted of overlapping short sentences spoken by 10 Japanese speakers. Using a 5-point scale, 12 Japanese participants rated the pop-out score of the speech items, which they listened to through headphones. The scores and acoustic features of the speech items were investigated using correlation analysis and principal coordinate analysis. The pop-out score was found to relate to acoustic features such as overall intensity, relative intensity in the high-frequency range, fundamental frequency, the dynamic feature of the spectrum, and spectral shape in the high-frequency range. The results suggest that these are crucial acoustic features for the pop-out voice.

- Authors

- Kimiko Yamakawa, Shigeaki Amano, Mariko Kondo

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.43, no.5, pp.241-250, 2022-09-01 (Released:2022-09-01)

- Number of references

- 27

Vietnamese speakers' mispronunciations of Japanese singleton and geminate stops were identified using the category boundary of the stops pronounced by native Japanese speakers. To clarify the characteristics of the Vietnamese speakers' mispronunciations, their speech segment durations were analyzed. In comparison with native Japanese speakers' correct pronunciations, Vietnamese speakers mispronounced a singleton stop with a longer closure and a shorter preceding consonant-vowel segment, whereas they mispronounced a geminate stop with a shorter closure and a longer following consonant-vowel segment. These results were consistent with the findings for Korean, Taiwanese Mandarin, and Thai speakers in previous studies, suggesting that non-native speakers may share a common tendency toward inadequate durations of the closure and of the preceding and following consonant-vowel segments when mispronouncing Japanese singleton and geminate stops.

- Authors

- Seiji Adachi

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.25, no.6, pp.400-405, 2004 (Released:2004-11-01)

- Number of references

- 41

- Number of citations

- 4 6

This paper presents an outline of the sound production mechanisms in wind instruments and reviews recent progress in the research on different types of wind instruments, i.e., reed woodwinds, brass, and air-jet driven instruments. Until recently, sound production has been explained by models composed of lumped elements, each of which is often assumed to have only a few degrees of freedom. Although these models have achieved great success in explaining the fundamental properties of the instruments, recent experiments using elaborate methods of measurement, such as visualization, have revealed phenomena that cannot be explained by such models. To advance our understanding, more detailed models with many degrees of freedom should be constructed as necessary. The following three different phenomena may be involved in sound production: mechanical oscillation of the reed, fluid dynamics of the airflow, and acoustic resonance of the instrument. Among them, our understanding of fluid dynamics is the most primitive, although it plays a crucial role in linking the sound generator with the acoustic resonator of the instrument. Recent research has also implied that a rigorous treatment of fluid dynamics is necessary for a thorough understanding of the principles of sound production in wind instruments.

- Authors

- Tsubasa Sakai, Daisuke Morikawa, Parham Mokhtari

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.43, no.3, pp.213-215, 2022-05-01 (Released:2022-05-01)

- Number of references

- 7

- Authors

- Seiji Adachi

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.38, no.1, pp.14-22, 2017-01-01 (Released:2017-01-01)

- Number of references

- 23

- Number of citations

- 2 2

A minimal model explaining intonation anomaly, or pitch sharpening, which can sometimes be found in baroque flutes, recorders, shakuhachis, etc. played with cross-fingering, is presented. In this model, two bores above and below an open tone hole are coupled through the hole. This coupled system has two resonance frequencies $\omega_\pm$, which are respectively higher and lower than those of the upper and lower bores, $\omega_U$ and $\omega_L$, excited independently. The $\omega_\pm$ differ even if $\omega_U = \omega_L$. The normal effect of cross-fingering, i.e., pitch flattening, corresponds to excitation of the $\omega_-$-mode, which occurs when $\omega_L \gtrapprox \omega_U$ and the admittance peak of the $\omega_-$-mode is higher than or as high as that of the $\omega_+$-mode. Excitation of the $\omega_+$-mode yields intonation anomaly. This occurs when $\omega_L \lessapprox \omega_U$ and the peak of the $\omega_+$-mode becomes sufficiently high. With an extended model having three degrees of freedom, pitch bending of the recorder played with cross-fingering in the second register has been reasonably explained.
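The frequency splitting described in this abstract can be illustrated with a generic pair of coupled oscillators. The sketch below is not the acoustic model of the paper: the function name `coupled_mode_frequencies` and the `coupling` parameter (in units of squared angular frequency) are assumptions made for illustration only. It shows only the qualitative behaviour stated above, namely that one coupled frequency lies above both uncoupled frequencies, the other lies below both, and the two differ even when the uncoupled frequencies are equal.

```python
import math


def coupled_mode_frequencies(omega_u, omega_l, coupling):
    """Return (omega_minus, omega_plus) for two generic coupled oscillators.

    omega_u, omega_l: uncoupled angular frequencies (rad/s).
    coupling: hypothetical coupling strength in (rad/s)^2; not taken from the paper.
    """
    a = omega_u ** 2
    b = omega_l ** 2
    if coupling ** 2 >= a * b:
        raise ValueError("coupling too strong: lower mode would not be oscillatory")
    # Eigenvalues of the symmetric matrix [[a, coupling], [coupling, b]].
    root = math.sqrt((a - b) ** 2 + 4.0 * coupling ** 2)
    omega_minus = math.sqrt((a + b - root) / 2.0)
    omega_plus = math.sqrt((a + b + root) / 2.0)
    return omega_minus, omega_plus


if __name__ == "__main__":
    w = 2.0 * math.pi * 440.0
    lo, hi = coupled_mode_frequencies(w, w, 0.1 * w ** 2)
    print(lo / (2.0 * math.pi), hi / (2.0 * math.pi))
```

With equal uncoupled frequencies and a nonzero `coupling`, the two returned values still differ, which mirrors the statement that the coupled frequencies split even when the upper and lower bore frequencies coincide.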

- Authors

- Tawhidul Islam Khan, Md. Mehedi Hassan, Moe Kurihara, Shuya Ide

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.42, no.5, pp.241-251, 2021-09-01 (Released:2021-09-01)

- Number of references

- 22

- Number of citations

- 2

Osteoarthritis (OA) of the knee is a widespread disease caused by articular cartilage damage, and its prevalence has become a severe public health problem worldwide, especially in ageing societies. Although X-ray, MRI, CT, etc. are commonly used to examine knee OA by introducing external high energy into the body, they do not provide dynamic information on knee joint integrity. In the present research, the acoustic emission (AE) technique has been applied to healthy individuals as well as OA patients in order to evaluate knee integrity in dynamic analysis modes without introducing any external energy. Four groups of people (young, middle-aged, older, and OA patients) participated in the present research, and significant results have been identified. It has been found that the degeneration of the articular cartilage progresses gradually with increasing age. The angular positions of knee damage are also evaluated by clarifying AE hits. The results are verified through clinical investigations by an orthopedic surgeon applying X-ray and MRI techniques. The results of the present research demonstrate that the AE technique can be considered a promising tool for the diagnosis of knee osteoarthritis.

- Authors

- Kaoru Ashihara

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.27, no.6, pp.332-335, 2006 (Released:2006-11-01)

- Number of references

- 14

When two or more tones are presented simultaneously, a listener can sometimes hear other tones that are not present. These other tones, called ‘combination tones’, are thought to be induced by nonlinear activities in the inner ear. It is difficult to demonstrate this phenomenon because a listener cannot easily distinguish combination tones from primary tones. This paper introduces a unique method called the ‘sweep tone method’ by which combination tones can be perceptually distinguished from primary tones relatively easily. The importance of the nonlinear characteristics of the intact auditory system is described.
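As a small worked example of what "tones that are not present" can mean, the sketch below computes the frequencies of three commonly described combination tones for a pair of primary tones: the quadratic difference tone (f2 - f1), the cubic difference tone (2·f1 - f2), and the summation tone (f1 + f2), with f1 < f2. The abstract does not state which combination tones the paper examines, so this choice and the function name `combination_tones` are assumptions for illustration.

```python
def combination_tones(f1, f2):
    """Return frequencies (Hz) of common combination tones for two primaries.

    The set of tones listed here is an illustrative assumption,
    not a description of the sweep tone method itself.
    """
    low, high = sorted((f1, f2))
    return {
        "quadratic_difference": high - low,   # f2 - f1
        "cubic_difference": 2 * low - high,   # 2*f1 - f2
        "summation": low + high,              # f1 + f2
    }


if __name__ == "__main__":
    for name, freq in combination_tones(1000.0, 1200.0).items():
        print(f"{name}: {freq:.1f} Hz")
```

For primaries at 1000 Hz and 1200 Hz, none of the three computed frequencies coincides with a primary, which is why such tones have to be attributed to nonlinearity rather than to the stimulus itself.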

- Authors

- Ahnaf Mozib Samin, M. Humayon Kobir, Shafkat Kibria, M. Shahidur Rahman

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.42, no.5, pp.252-260, 2021-09-01 (Released:2021-09-01)

- Number of references

- 25

- Number of citations

- 3

Research in corpus-driven Automatic Speech Recognition (ASR) is advancing rapidly towards building a robust Large Vocabulary Continuous Speech Recognition (LVCSR) system. Under-resourced languages like Bangla require benchmarking on large corpora so that further research on LVCSR can tackle their limitations and avoid biased results. In this paper, a publicly available large-scale Bangladeshi Bangla speech corpus is used to implement a deep Convolutional Neural Network (CNN) based model and a Recurrent Neural Network (RNN) based model with the Connectionist Temporal Classification (CTC) loss function for Bangla LVCSR. In experimental evaluations, we find that the CNN-based architecture yields superior results over the RNN-based approach. This study also emphasizes assessing the quality of an open-source large-scale Bangladeshi Bangla speech corpus and investigating the effect of various high-order N-gram Language Models (LM) on Bangla, a morphologically rich language. We achieve a 36.12% word error rate (WER) using the CNN-based acoustic model and a 13.93% WER using beam search decoding with a 5-gram LM. The findings demonstrate by far the state-of-the-art performance of any Bangla LVCSR system on a specific benchmarked large corpus.
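The WER figures quoted above are conventionally obtained from the word-level edit distance between a reference transcript and the recognizer output. The following is a minimal sketch of that standard definition, assuming whitespace tokenization; the function name `word_error_rate` is illustrative, and the paper's own scoring tool and text normalization are not described in the abstract.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("a b c d", "a x c"))
```

Because insertions count as errors but do not lengthen the reference, the value can exceed 1.0 when the hypothesis is much longer than the reference.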

- Authors

- Kenji Kobayashi, Yoshiki Masuyama, Kohei Yatabe, Yasuhiro Oikawa

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.42, no.5, pp.261-269, 2021-09-01 (Released:2021-09-01)

- Number of references

- 42

- Number of citations

- 1

Phase recovery is a methodology for estimating a phase spectrogram that is reasonable for a given amplitude spectrogram. To enhance the signals obtained from processed amplitude spectrograms, it has been applied to several audio applications such as harmonic/percussive source separation (HPSS). Because HPSS is often utilized as a preprocessing step for other processes, its phase recovery should be simple. Therefore, practically effective methods that do not require much computational cost, such as phase unwrapping (PU), have been considered in HPSS. However, PU often results in a phase that is completely different from the true phase because (1) it does not consider the observed phase and (2) estimation error accumulates over time. To circumvent this problem, we propose a phase-recovery method for HPSS using the observed phase information. Instead of accumulating the phase as in PU, we formulate a local optimization model based on the observed phase so that the estimated phase remains similar to the observed phase. The analytic solution to the proposed optimization model is provided to keep the computational cost low. In addition, the iterative phase refinement used in existing methods is applied to further improve the result. The experiments confirmed that the proposed method outperformed PU.
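The second drawback named above, error accumulating over time, can be seen in a generic phase-accumulation sketch. This is not the PU formulation used by the authors, which the abstract does not spell out; it only assumes that the phase of a sinusoidal component advances by 2π·f·hop/sample_rate per frame and that the estimate is built by summing those advances. The function name `accumulate_phase` and all numeric values are illustrative.

```python
import math


def accumulate_phase(step_freqs_hz, hop, sample_rate, initial_phase=0.0):
    """Sum the expected per-frame phase advance of a sinusoidal component.

    step_freqs_hz: estimated frequency (Hz) used for each frame-to-frame step.
    Returns len(step_freqs_hz) + 1 phases in radians (not wrapped).
    """
    phases = [initial_phase]
    for freq in step_freqs_hz:
        phases.append(phases[-1] + 2.0 * math.pi * freq * hop / sample_rate)
    return phases


if __name__ == "__main__":
    hop, sample_rate, steps = 256, 16000, 100
    true_phase = accumulate_phase([440.0] * steps, hop, sample_rate)
    est_phase = accumulate_phase([441.0] * steps, hop, sample_rate)  # 1 Hz estimation error
    drift = est_phase[-1] - true_phase[-1]
    print(f"phase drift after {steps} frames: {drift:.3f} rad")
```

In this toy setting, a constant 1 Hz frequency error grows into a drift of more than one full cycle after 100 frames, because nothing ties the accumulated phase back to an observation.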

- Authors

- Hikichi Takafumi, Osaka Naotoshi

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.23, no.1, pp.25-27, 2002 (Released:2002-02-08)

- Number of references

- 6

- Number of citations

- 1 1

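OA A Method of Pitch Extraction from Speech in the Time Domain (時領域における音声のピッチ抽出の一方式)

- Authors

- 藤崎 博也, 田辺 吉久

- Publisher

- Acoustical Society of Japan

- Journal

- 日本音響学会誌 (Journal of the Acoustical Society of Japan) (ISSN:03694232)

- Volume, issue, pages, and publication date

- vol.29, no.7, pp.418-419, 1973-07-01 (Released:2017-06-02)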

- Authors

- Kaoru Sekiyama

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.41, no.1, pp.37-38, 2020-01-01 (Released:2020-01-06)

- Number of references

- 10

Speech perception is often audiovisual, as demonstrated in the McGurk effect: Auditory and visual speech cues are integrated even when they are incongruent. Although this illusion suggests a universal process of audiovisual integration, the process has been shown to be modulated by language backgrounds. This paper reviews studies investigating inter-language differences in audiovisual speech perception. In these examinations with behavioral and neural data, it is shown that native speakers of English use visual speech cues more than those of Japanese, with different neural underpinnings for the two language groups.

- Authors

- Catherine Stevens

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.25, no.6, pp.433-438, 2004 (Released:2004-11-01)

- Number of references

- 61

- Number of citations

- 7 13

This review describes cross-cultural studies of pitch including intervals, scales, melody, and expectancy, and perception and production of timing and rhythm. Cross-cultural research represents only a small portion of music cognition research yet is essential to i) test the generality of contemporary theories of music cognition; ii) investigate different kinds of musical thought; and iii) increase understanding of the cultural conditions and contexts in which music is experienced. Converging operations from ethology and ethnography to rigorous experimental investigations are needed to record the diversity and richness of the musics, human responses, and contexts. Complementary trans-disciplinary approaches may also minimize bias from a particular ethnocentric view.

- Authors

- Rieko Kubo, Reiko Akahane-Yamada

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.27, no.1, pp.59-61, 2006 (Released:2006-01-01)

- Number of references

- 6

- Number of citations

- 1 2

- Authors

- Husne Ara Chowdhury, Mohammad Shahidur Rahman

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.42, no.2, pp.93-102, 2021-03-01 (Released:2021-03-01)

- Number of references

- 29

- Number of citations

- 3

The magnitude spectrum is a popular mathematical tool for speech signal analysis. In this paper, we propose a new technique for improving the performance of the magnitude spectrum by utilizing the benefits of the group delay (GD) spectrum to estimate the characteristics of a vocal tract accurately. The traditional magnitude spectrum suffers from difficulties when estimating vocal tract characteristics, particularly for high-pitched speech, owing to its low resolution and high spectral leakage. Phase domain analysis shows that the GD spectrum has low spectral leakage and high resolution owing to its additive property. Thus, the magnitude spectrum modified with its GD spectrum, referred to as the modified spectrum, is found to significantly improve the estimation of formant frequencies over traditional methods. The accuracy is tested on synthetic vowels for a wide range of fundamental frequencies up to the high-pitched female speaker range. The validity of the proposed method is also verified by inspecting the formant contour of an utterance from the Texas Instruments and Massachusetts Institute of Technology (TIMIT) database and the standard F2–F1 plot of natural vowel speech spoken by male and female speakers. The result is compared with two state-of-the-art methods. Our proposed method performs better than both of them.
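The group delay spectrum and its additive property can be sketched with the standard identity τ(ω) = (X_R·Y_R + X_I·Y_I) / |X|², where X is the DFT of x[n] and Y is the DFT of n·x[n]. The code below illustrates only this textbook definition; it is not the modified spectrum proposed in the paper, and the function names `dft` and `group_delay` as well as the small `eps` guard are assumptions for illustration.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (O(N^2)), adequate for short examples."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def group_delay(x, eps=1e-12):
    """Group delay in samples at each DFT bin, without explicit phase unwrapping."""
    spectrum = dft(x)
    ramped = dft([t * value for t, value in enumerate(x)])  # DFT of n * x[n]
    delays = []
    for xk, yk in zip(spectrum, ramped):
        power = abs(xk) ** 2
        if power > eps:
            delays.append((xk.real * yk.real + xk.imag * yk.imag) / power)
        else:
            delays.append(0.0)  # undefined where the spectrum vanishes
    return delays


if __name__ == "__main__":
    # A pure delay of 3 samples has a group delay of 3 at every bin.
    print(group_delay([0, 0, 0, 1, 0, 0, 0, 0]))
```

Additivity means that when two filters are cascaded (their impulse responses convolved), their group delays add bin by bin, whereas their magnitude spectra multiply.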

- Authors

- Seiji Nakagawa

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.41, no.6, pp.851-856, 2020-11-01 (Released:2020-11-01)

- Number of references

- 36

Although the mechanisms involved remain unclear, several studies have reported that bone-conducted ultrasounds (BCUs) can be perceived even by those with profound sensorineural hearing impairment, who typically can hardly sense sounds even with conventional hearing aids. We have identified both the psychological characteristics and the neurophysiological mechanisms underlying the perception of BCUs using psychophysical, electrophysiological, and vibration measurements and computer simulations, and have applied the findings to a novel hearing aid for the profoundly hearing impaired. In addition, the mechanisms of perception and propagation of BCUs presented to distant parts of the body (neck, trunk, upper limb) were investigated.

- Authors

- Kenji Kurakata, Tazu Mizunami, Kazuma Matsushita

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.34, no.1, pp.26-33, 2013-01-01 (Released:2013-01-01)

- Number of references

- 20

- Number of citations

- 2 7

The sensory unpleasantness of high-frequency sounds of 1 kHz and higher was investigated in psychoacoustic experiments in which young listeners with normal hearing participated. Sensory unpleasantness was defined as a perceptual impression of sounds and was differentiated from annoyance, which implies a subjective relation to the sound source. Listeners evaluated the degree of unpleasantness of high-frequency pure tones and narrow-band noise (NBN) by the magnitude estimation method. Estimates were analyzed in terms of the relationship with sharpness and loudness. Results of analyses revealed that the sensory unpleasantness of pure tones was a different auditory impression from sharpness; the unpleasantness was more level dependent but less frequency dependent than sharpness. Furthermore, the unpleasantness increased at a higher rate than loudness did as the sound pressure level (SPL) became higher. Equal-unpleasantness-level contours, which define the combinations of SPL and frequency of tone having the same degree of unpleasantness, were drawn to display the frequency dependence of unpleasantness more clearly. Unpleasantness of NBN was weaker than that of pure tones, although those sounds were expected to have the same loudness as pure tones. These findings can serve as a basis for evaluating the sound quality of machinery noise that includes strong discrete components at high frequencies.

- Authors

- Yizhen Zhou, Yosuke Nakamura, Ryoko Mugitani, Junji Watanabe

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.42, no.1, pp.36-45, 2021-01-01 (Released:2021-01-01)

- Number of references

- 39

- Number of citations

- 1

The goal of this study was to demonstrate the influence of prior auditory and visual information on speech perception, using a priming paradigm to investigate the shift in the perceptual boundary of geminate consonants. Although previous research has shown that visual information such as photographs influences the perception of spoken words, the effects of auditory and visual (written or illustrated) information have not been directly compared. In the present study, native Japanese speakers judged whether or not a spoken word was a geminate word after hearing or seeing a prime word or pseudoword that contained either a singleton or a geminate feature. The results indicate that spoken words, written words, and even illustrations presented prior to the target sounds can guide the boundary shift in Japanese geminate perception. Significantly, the influence of auditory information is independent of the lexical status of the primes; that is, both word and pseudoword auditory primes with geminate sound features induced a significant bias. On the other hand, visual primes induced the bias only when the primes coincided lexically with the targets, indicating that the influence of visual information on geminate perception differs from that of auditory information.

- Authors

- Charles Spence

- Publisher

- ACOUSTICAL SOCIETY OF JAPAN

- Journal

- Acoustical Science and Technology (ISSN:13463969)

- Volume, issue, pages, and publication date

- vol.41, no.1, pp.6-12, 2020-01-01 (Released:2020-01-06)

- Number of references

- 98

- Number of citations

- 2 15

The last few years have seen an explosion of interest from researchers in crossmodal correspondences, defined as the surprising connections that the majority of people share between seemingly unrelated stimuli presented in different sensory modalities. Intriguingly, many of the crossmodal correspondences that have been documented or studied to date have involved audition as one of the corresponding modalities. In fact, auditory pitch may well be the single most commonly studied dimension in correspondences research thus far. That said, relatively separate literatures have focused on the crossmodal correspondences involving simple versus more complex auditory stimuli. In this review, I summarize the evidence in this area and consider the relative explanatory power of the various accounts (statistical, structural, semantic, and emotional) that have been put forward to explain the correspondences. The suggestion is made that the relative contributions of the different accounts likely differ in the case of correspondences involving simple versus more complex stimuli (i.e., pure tones vs. short musical excerpts). Furthermore, the consequences of presenting corresponding versus non-corresponding stimuli likely also differ in the two cases. In particular, while crossmodal correspondences may facilitate binding (i.e., multisensory integration) in the case of simple stimuli, the combination of more complex stimuli (such as musical excerpts and paintings) may instead be processed more fluently when the component stimuli correspond. Finally, attention is drawn to the fact that the existence of a crossmodal correspondence does not in and of itself necessarily imply that a crossmodal influence of one modality on the perception of stimuli in the other will also be observed.