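[OA] An Amoeba Computing Paradigm Fusing Cyberspace and Physical Space

- Authors: 青野 真士, 鯨井 悠生, 野﨑 大幹
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 33, no. 5, pp. 561-569, 2018-09-01 (released 2020-09-29)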

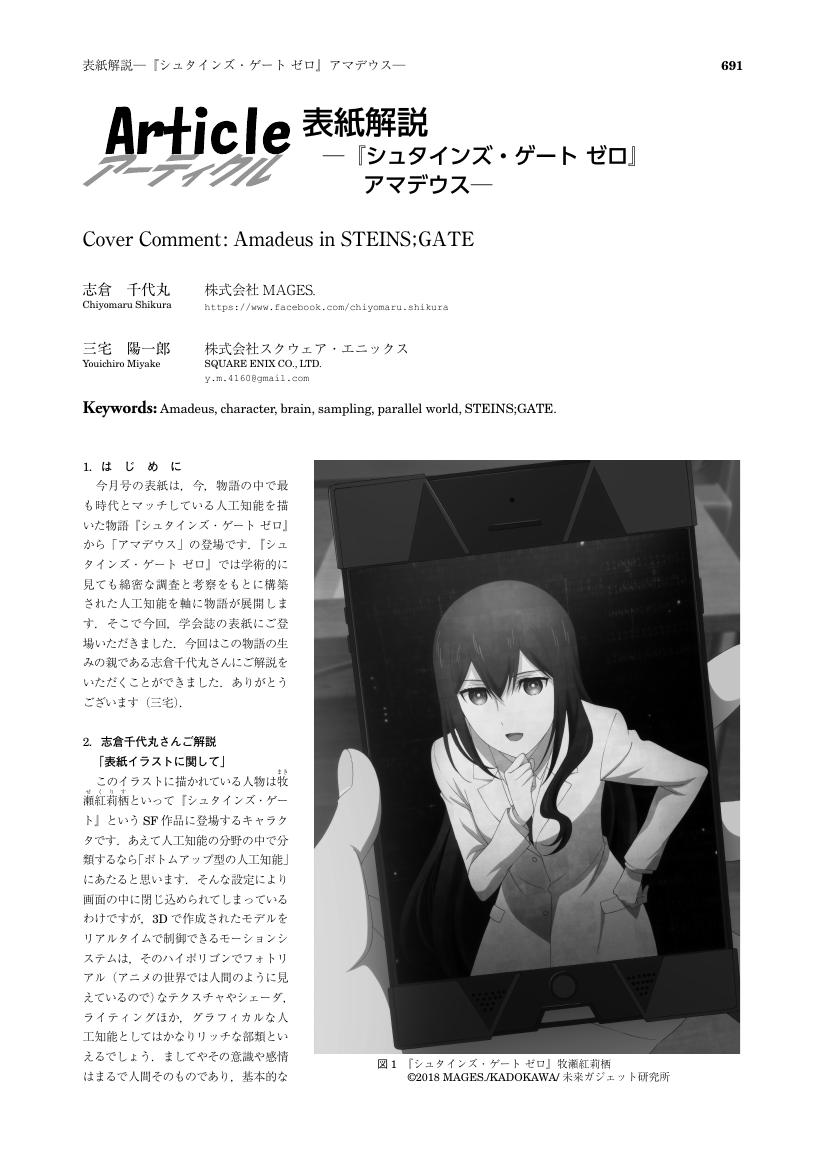

[OA] Article: Cover Commentary (Amadeus from "Steins;Gate 0")

- Authors: 志倉 千代丸, 三宅 陽一郎
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 33, no. 5, pp. 691-692, 2018-09-01 (released 2020-09-29)

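[OA] Psychological Experiments in Cognitive Science ("Cognitive Science" series, part 7)

- Authors: 市川 伸一, 伊藤 毅志
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 17, no. 1, pp. 77-83, 2002-01-01 (released 2020-09-29)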

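[OA] New Earth Observation Opened Up by Hyperspectral Image Processing (Special Issue: Artificial Intelligence Technologies Taking On Space)

- Authors: 横矢 直人, 岩崎 晃
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 29, no. 4, pp. 357-365, 2014-07-01 (released 2020-09-29)
- Cited by: 1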

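[OA] Acquisition and Analysis of Financial Market Trading Strategies Using Reinforcement Learning (Special Issue: Artificial Intelligence Applications in Finance)

- Authors: 松井 藤五郎, 後藤 卓
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 24, no. 3, pp. 400-407, 2009-05-01 (released 2020-09-29)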

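[OA] The Parallel Logic Language GHC and Its Programming Techniques (Special Issue: "Fifth Generation Computers")

- Authors: 藤田 博, 奥村 晃, 上田 和紀
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 4, no. 3, pp. 258-264, 1989-05-20 (released 2020-09-29)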

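Brain and Body Open to the Environment: Crayfish and a Soft Robotic Octopus Arm

- Authors: 加賀谷 勝史
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 37, no. 6, pp. 721-726, 2022-11-01 (released 2022-11-01)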

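[OA] Speeding Up Search and Its Limits (Mini Special Issue: "Techniques for Speeding Up Inference")

- Authors: 茨木 俊秀
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 6, no. 1, pp. 15-23, 1991-01-01 (released 2020-09-29)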

[OA] Research on Computer Go (Mini Special Issue: "Game Programming")

- Authors: 斉藤 康己
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 10, no. 6, pp. 860-870, 1995-11-01 (released 2020-09-29)

- Authors: 佐久間 洋司
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 32, no. 6, pp. 1012-1014, 2017-11-01 (released 2020-09-29)

- Authors: 佐倉 統
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 18, no. 2, pp. 145-152, 2003-03-01 (released 2020-09-29)

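[OA] 萩谷 昌己 and 横森 貴 (eds.): DNAコンピュータ (DNA Computers), 培風館 (Baifukan), 2001

- Authors: 上田 和紀
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 18, no. 1, p. 102, 2003-01-01 (released 2020-09-29)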

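[OA] Sorting Out the Issues in the Social Implementation of AI Agents: The Case of "AI さくらさん"

- Authors: 藤堂 健世, 佐久間 洋司, 大澤 博隆, 清田 陽司
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 35, no. 5, pp. 638-642, 2020-09-01 (released 2020-11-02)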

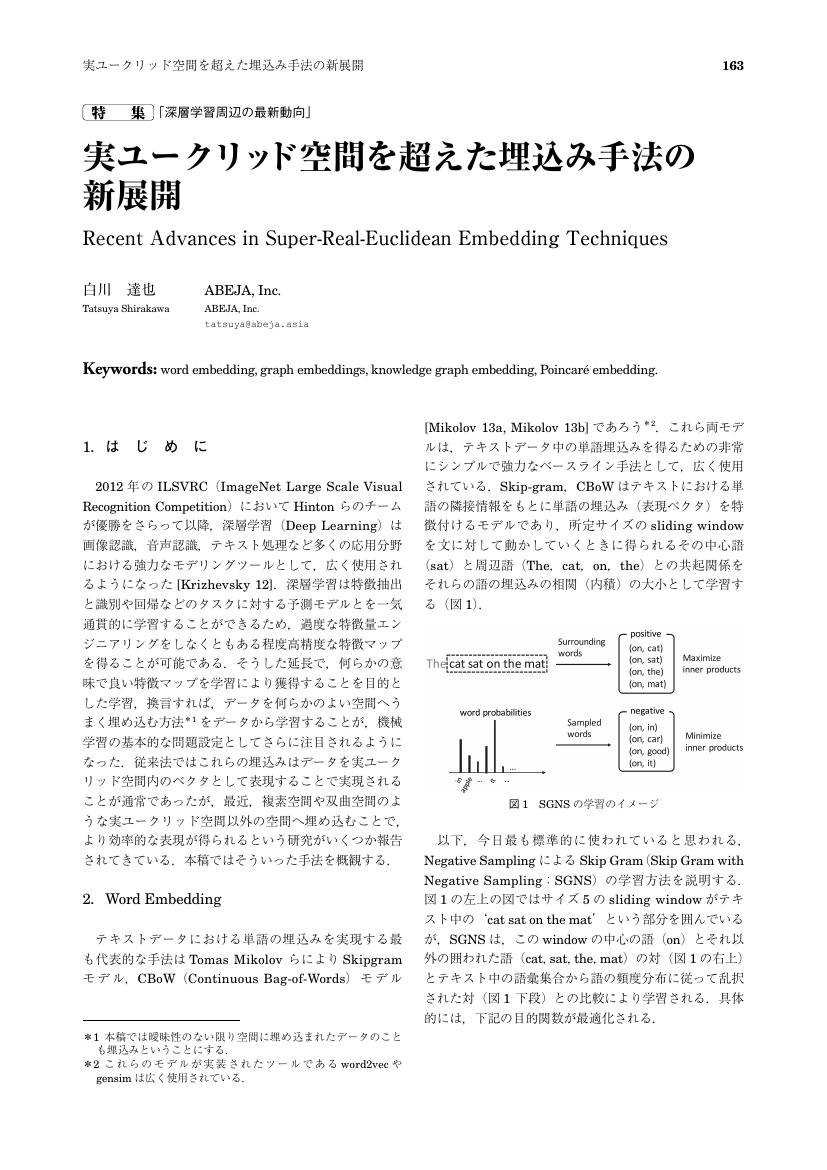

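[OA] New Developments in Embedding Methods Beyond Real Euclidean Space

- Authors: 白川 達也
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 33, no. 2, pp. 163-169, 2018-03-01 (released 2020-09-29)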

[OA] Introduction to the Special Issue "Affective Computing"

- Authors: 寺田 和憲, 熊野 史朗, 田和辻 可昌
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 36, no. 1, pp. 2-3, 2021-01-01 (released 2021-01-01)

[OA] My Bookmarks: "Interpretability in Machine Learning"

- Authors: 原 聡
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 33, no. 3, pp. 366-369, 2018-05-01 (released 2020-09-29)
- Cited by: 2

[OA] Special Issue "Data Ecosystem": Introduction to the Special Issue

- Authors: 早矢仕 晃章, 坂地 泰紀, 深見 嘉明
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 37, no. 5, pp. 548-549, 2022-09-01 (released 2022-09-01)

- Authors: 鈴木 貴之, 大澤 博隆, 清田 陽司, 三宅 陽一郎, 大内 孝子
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 37, no. 5, pp. 649-660, 2022-09-01 (released 2022-09-01)

- Authors: 荒井 幸代, 宮崎 和光, 小林 重信
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 13, no. 4, pp. 609-618, 1998-07-01 (released 2020-09-29)
- Cited by: 1

Most multi-agent systems have been developed in the field of Distributed Artificial Intelligence (DAI), whose schemes rely on extensive prior knowledge of the agents' world or on organized relationships among the agents. However, such knowledge is not always available. Multi-agent reinforcement learning is therefore worth considering as a way to realize cooperative behavior among agents that have little prior knowledge. Two main problems must be addressed in multi-agent reinforcement learning. One is uncertainty in state transitions, which arises because the agents learn concurrently. The other is the perceptual aliasing problem, which generally occurs in such a world. Robustness and flexibility with respect to these two problems are therefore essential for multi-agent reinforcement learning. In this paper, we evaluate Q-learning and Profit Sharing as methods for multi-agent reinforcement learning through experiments on the Pursuit Problem, which we take up as a representative multi-agent world. In the experiments, we assume neither any predefined relationship among the agents nor any control knowledge for cooperation. Learning agents do not share sensations, episodes, or policies; each agent learns from its own episodes, independently of the others. The results show that cooperative behavior clearly emerges among Profit Sharing hunters, which are not affected by concurrent learning, even when the prey follows a specific escape strategy against the hunters. Moreover, these hunters behave rationally in perceptually aliased areas. Q-learning hunters, on the other hand, fail to acquire any policy in such a world. From these experiments, we conclude that Profit Sharing has good properties for multi-agent reinforcement learning because of its robustness to changes in other agents' policies and to the limits of each agent's sensing abilities.
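As a rough illustration of the two methods compared in the abstract above, the sketch below contrasts the textbook one-step Q-learning update with a Profit Sharing credit assignment that reinforces every (state, action) rule in an episode once a reward arrives. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the learning rate `alpha`, the discount `gamma`, and the geometrically decreasing reinforcement function with ratio `decay` are hypothetical choices for illustration. The paper's actual reinforcement function, parameters, and Pursuit Problem setup are not given here.

```python
from collections import defaultdict

# Hypothetical helpers for illustration; not the paper's implementation.
# Each table maps (state, action) -> value and defaults to 0.0 for unseen pairs.


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """One-step Q-learning:
    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])


def profit_sharing_update(weights, episode, reward, decay=0.25):
    """Profit Sharing: when a reward arrives, reinforce every (state, action)
    rule fired in the episode, giving geometrically smaller credit to rules
    fired further back in time (assumed reinforcement function)."""
    credit = reward
    for state, action in reversed(episode):
        weights[(state, action)] += credit
        credit *= decay


if __name__ == "__main__":
    # One Q-learning step from an all-zero table.
    q = defaultdict(float)
    q_learning_update(q, "s0", "up", 1.0, "s1", ["up", "down"])
    print(q[("s0", "up")])

    # One rewarded episode of three rules under Profit Sharing.
    w = defaultdict(float)
    profit_sharing_update(w, [("s0", "up"), ("s1", "left"), ("s2", "down")], 1.0)
    print(dict(w))
```

Since each hunter in the abstract learns independently, each would keep its own table such as `q` or `w`. One plausible reading of the reported contrast is that the Profit Sharing update depends only on the agent's own rewarded episode, whereas the Q-learning target bootstraps from value estimates that shift as the other agents' policies change.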

- Authors: 松香 敏彦, 川端 良子
- Publisher: The Japanese Society for Artificial Intelligence
- Journal: 人工知能 (ISSN 2188-2266)
- Volume, issue, pages, date: vol. 31, no. 1, pp. 67-73, 2016-01-01 (released 2020-09-29)