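[Open access] A Stroll through Applied Mathematics (26): The Origins of Information Geometry (応用数理の遊歩道(26) : 情報幾何の生い立ち)

- Authors

- Shun-ichi Amari

- Publisher

- The Japan Society for Industrial and Applied Mathematics

- Journal

- Bulletin of the Japan Society for Industrial and Applied Mathematics (応用数理) (ISSN:24321982)

- Volume, issue, pages, publication date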

- vol.11, no.3, pp.253-256, 2001-09-14 (Released:2017-04-08)

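[Open access] Information Geometry (情報幾何学)

- Authors

- Shun-ichi Amari

- Publisher

- The Japan Society for Industrial and Applied Mathematics

- Journal

- Bulletin of the Japan Society for Industrial and Applied Mathematics (応用数理) (ISSN:24321982)

- Volume, issue, pages, publication date

- vol.2, no.1, pp.37-56, 1992-03-16 (Released:2017-04-08)

- Number of references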

- 26

Information geometry is a new theoretical method for elucidating the intrinsic geometrical structures underlying information systems. It is applicable to wide areas of the information sciences, including statistics, information theory, and systems theory. More concretely, information geometry studies the intrinsic geometrical structure of the manifold of probability distributions, and this manifold turns out to give rise to a new and rich differential-geometrical theory. Since most of the information sciences are closely related to probability distributions, information geometry provides a powerful method for studying their intrinsic structures. A manifold consisting of a smooth family of probability distributions has a unique invariant Riemannian metric, given by the Fisher information. It also admits a one-parameter family of invariant affine connections, called the α-connections, in which the α- and −α-connections are dually coupled with respect to the Riemannian metric. Duality of affine connections is a new concept in differential geometry. When a manifold is dually flat, it admits an invariant divergence measure for which a generalized Pythagorean theorem and a projection theorem hold. The dual structure of such manifolds can be applied to statistical inference, multiterminal information theory, control systems theory, neural network manifolds, and other areas, and it could potentially be applied to broader disciplines, including the physical and engineering sciences.
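The generalized Pythagorean theorem mentioned in the abstract can be checked numerically in a simple dually flat setting. The sketch below assumes discrete joint distributions on a 3×4 grid and uses the Kullback-Leibler divergence. It takes the family of independent (product) distributions, which is e-flat. Projecting a joint distribution p onto this family (the m-projection) gives the product q of its marginals. For any product distribution r, the divergence should then split as KL(p||r) = KL(p||q) + KL(q||r). The helper names `kl` and `project_to_independent` and the random test distributions are illustrative assumptions, not taken from the article.

```python
import numpy as np

def kl(p, q):
    """Kullback-Leibler divergence KL(p || q) between strictly positive distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * np.log(p / q)))

def project_to_independent(p):
    """m-projection of a joint distribution p(x, y) onto the (e-flat) family of
    independent distributions: the outer product of p's two marginals."""
    return np.outer(p.sum(axis=1), p.sum(axis=0))

rng = np.random.default_rng(0)
p = rng.random((3, 4)) + 0.1          # arbitrary positive weights ...
p /= p.sum()                          # ... normalized into a joint distribution
q = project_to_independent(p)         # projection of p onto the independence family
# Another member of the independence family (hypothetical choice of marginals).
r = np.outer(rng.dirichlet(np.ones(3)), rng.dirichlet(np.ones(4)))

lhs = kl(p, r)
rhs = kl(p, q) + kl(q, r)
print(f"KL(p||r) = {lhs:.6f}   KL(p||q) + KL(q||r) = {rhs:.6f}")
```

The identity needs r to lie in the same e-flat family as q. If r is replaced by an arbitrary joint distribution that is not a product of marginals, the two sides generally differ.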

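[Open access] Natural Gradient Learning: The Geometry of Learning Spaces (自然勾配学習法-学習空間の幾何学)

- Authors

- Shun-ichi Amari

- Publisher

- The Society of Instrument and Control Engineers

- Journal

- Journal of the Society of Instrument and Control Engineers (計測と制御) (ISSN:04534662)

- Volume, issue, pages, publication date

- vol.40, no.10, pp.735-739, 2001-10-10 (Released:2009-11-26)

- Number of references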

- 21

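[Open access] Almost There, Deep Learning (もうちょっとだよなー,ディープラーニング)

- Authors

- Shun-ichi Amari

- Publisher

- The Japanese Society for Artificial Intelligence

- Journal

- Journal of the Japanese Society for Artificial Intelligence (人工知能) (ISSN:21882266)

- Volume, issue, pages, publication date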

- vol.32, no.6, pp.827-835, 2017-11-01 (Released:2020-09-29)

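[Open access] Differential Geometry of Statistical Inference (統計的推論の微分幾何学)

- Authors

- Shun-ichi Amari

- Publisher

- The Mathematical Society of Japan

- Journal

- Sugaku (数学) (ISSN:0039470X)

- Volume, issue, pages, publication date

- vol.35, no.3, pp.229-246, 1983-07-26 (Released:2008-12-25)

- Number of references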

- 37

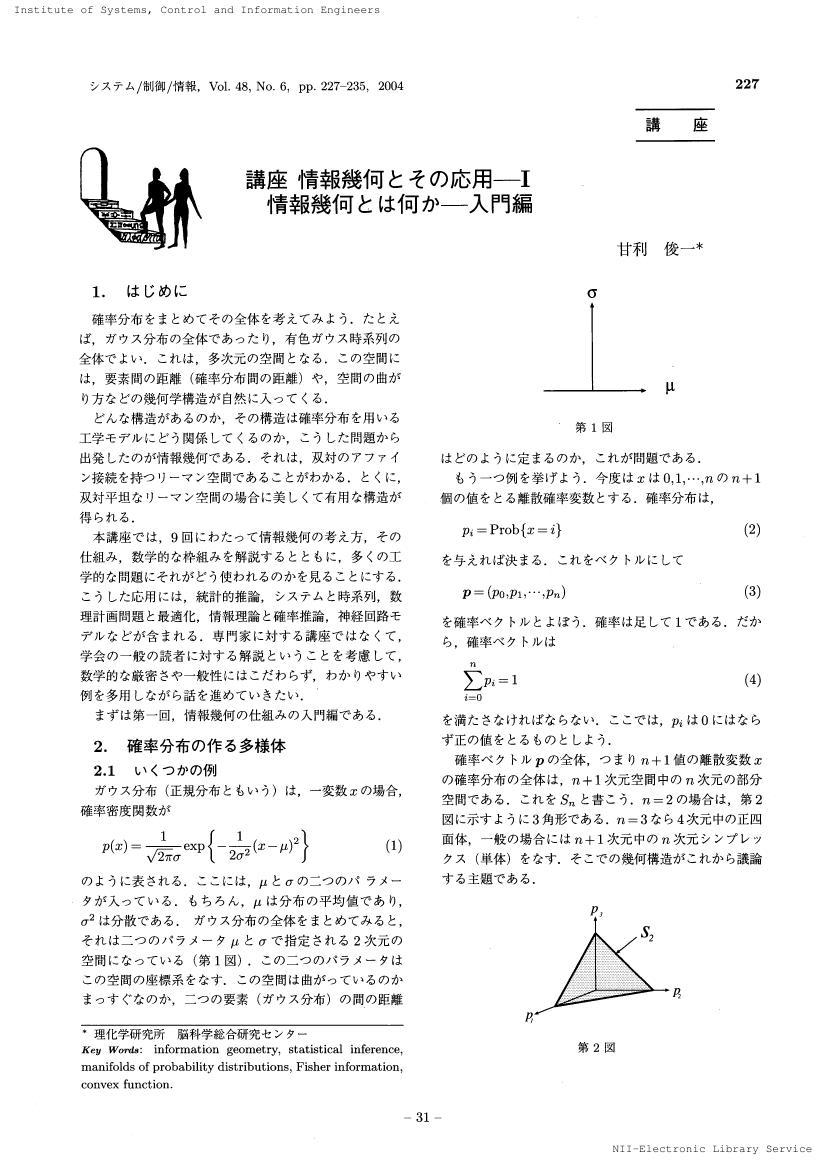

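[Open access] Lecture Series: Information Geometry and Its Applications I: What Is Information Geometry? (Introduction) (講座 情報幾何とその応用-I : 情報幾何とは何か-入門編)

- Authors

- Shun-ichi Amari

- Publisher

- The Institute of Systems, Control and Information Engineers

- Journal

- Systems, Control and Information (システム/制御/情報) (ISSN:09161600)

- Volume, issue, pages, publication date

- vol.48, no.6, pp.227-235, 2004-06-15 (Released:2017-04-15)

- Number of references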

- 2

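Information Geometry (情報幾何学)

- Authors

- Shun-ichi Amari

- Publisher

- The Japan Society for Industrial and Applied Mathematics

- Journal

- Bulletin of the Japan Society for Industrial and Applied Mathematics (応用数理) (ISSN:09172270)

- Volume, issue, pages, publication date

- vol.2, no.1, pp.37-56, 1992

- Number of references

- 26

- Times cited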

- 3


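[Open access] A Stroll through Applied Mathematics (24): In Search of Mathematical Engineering (応用数理の遊歩道(24) : 数理工学を求めて)

- Authors

- Shun-ichi Amari

- Publisher

- The Japan Society for Industrial and Applied Mathematics

- Journal

- Bulletin of the Japan Society for Industrial and Applied Mathematics (応用数理) (ISSN:24321982)

- Volume, issue, pages, publication date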

- vol.11, no.1, pp.73-76, 2001-03-15 (Released:2017-04-08)

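[Open access] Technology for Building a Prince Shōtoku: Independent Component Analysis, a New Decomposition Method for Multivariate Data (聖徳太子をつくる技術:独立成分解析:多変量データの新しい分解法)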

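[Open access] Lecture Series: Information Geometry and Its Applications I: What Is Information Geometry? (Introduction) (講座 情報幾何とその応用-I : 情報幾何とは何か-入門編)

- Authors

- Shun-ichi Amari

- Publisher

- The Institute of Systems, Control and Information Engineers

- Journal

- Systems, Control and Information: Journal of the Institute of Systems, Control and Information Engineers (システム/制御/情報 : システム制御情報学会誌) (ISSN:09161600)

- Volume, issue, pages, publication date

- vol.48, no.6, pp.227-235, 2004-06-15

- Number of references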

- 2

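[Open access] Singularities of Neuromanifolds and Learning (神経多様体の特異点と学習)

- Authors

- Shun-ichi Amari, Tomoko Ozeki, Hyeyoung Park

- Publisher

- Japanese Neural Network Society

- Journal

- The Brain & Neural Networks (日本神経回路学会誌) (ISSN:1340766X)

- Volume, issue, pages, publication date

- vol.10, no.4, pp.189-200, 2003-12-05 (Released:2011-03-14)

- Number of references

- 40

- Times cited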

- 2 2

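When a neural network such as a multilayer perceptron is considered geometrically as a whole, as a manifold, it turns out to contain singularities that arise essentially from its hierarchical structure. These singularities are related to many practical problems, such as slowdowns in learning and degraded accuracy. This article deals with statistical inference and learning dynamics for models that contain such singular structures, an area developed mainly in Japan. It presents the underlying ideas and explains the research results the authors have obtained so far, together with their plans for future work.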
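The singularities described above can be made concrete with a toy example. The sketch assumes a hypothetical perceptron with one input and two tanh hidden units, f(x) = v1 tanh(w1 x) + v2 tanh(w2 x), observed with unit-variance Gaussian noise. Under that assumption, the Fisher information matrix is the average outer product of the output gradient. When the two hidden units have identical input weights (w1 = w2), the output depends only on v1 + v2 and the shared weight. The matrix therefore loses rank and the Fisher metric degenerates. This sketch illustrates one kind of singularity only and does not reproduce the article's analysis. The function names, parameter values, and input distribution are illustrative assumptions.

```python
import numpy as np

def output_gradients(params, x):
    """Gradients of f(x) = v1*tanh(w1*x) + v2*tanh(w2*x) with respect to
    (v1, v2, w1, w2), one row per input sample; shape (len(x), 4)."""
    v1, v2, w1, w2 = params
    t1, t2 = np.tanh(w1 * x), np.tanh(w2 * x)
    return np.stack([t1, t2, v1 * x * (1 - t1**2), v2 * x * (1 - t2**2)], axis=1)

def fisher_information(params, x):
    """Empirical Fisher information for y = f(x) + N(0, 1) noise: mean of grad f grad f^T."""
    g = output_gradients(params, x)
    return g.T @ g / len(x)

def numerical_rank(F, rel_tol=1e-10):
    """Count eigenvalues of the symmetric matrix F above rel_tol times the largest one."""
    eig = np.linalg.eigvalsh(F)
    return int(np.sum(eig > rel_tol * eig.max()))

rng = np.random.default_rng(1)
x = rng.normal(size=1000)                                 # hypothetical input distribution

regular = fisher_information((1.0, -0.5, 0.8, 1.5), x)   # two distinct hidden units
singular = fisher_information((1.0, -0.5, 1.2, 1.2), x)  # w1 == w2: the two units coincide

print("rank at a regular point:", numerical_rank(regular))
print("rank at the singular point:", numerical_rank(singular))
```

Setting an output weight to zero (for example v1 = 0) gives another singular point of this kind. In that case w1 no longer affects the output, and its row and column of the Fisher matrix vanish.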

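Information Geometry and Dynamical Systems (情報幾何学と力学系)

- Authors

- Akio Fujiwara, Shun-ichi Amari

- Publisher

- The Institute of Systems, Control and Information Engineers

- Journal

- Systems, Control and Information: Journal of the Institute of Systems, Control and Information Engineers (システム/制御/情報 : システム制御情報学会誌) (ISSN:09161600)

- Volume, issue, pages, publication date

- vol.39, no.1, pp.14-21, 1995-01

- Number of references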

- 37

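[Open access] Neural Network Research: Past, Present, and Future (Special Issue: Neural Networks) (ニューラルネットワーク研究の過去,現在,将来 (<特集>「ニューラルネットワーク」))

- Authors

- Shun-ichi Amari

- Publisher

- The Japanese Society for Artificial Intelligence

- Journal

- Journal of the Japanese Society for Artificial Intelligence (人工知能) (ISSN:21882266)

- Volume, issue, pages, publication date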

- vol.4, no.2, pp.120-127, 1989-03-20 (Released:2020-09-29)

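Almost There, Deep Learning (もうちょっとだよなー,ディープラーニング)

- Authors

- Shun-ichi Amari (RIKEN Brain Science Institute)

- Journal

- Journal of the Japanese Society for Artificial Intelligence (人工知能)

- Volume, issue, pages, publication date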

- vol.32, 2017-11-01

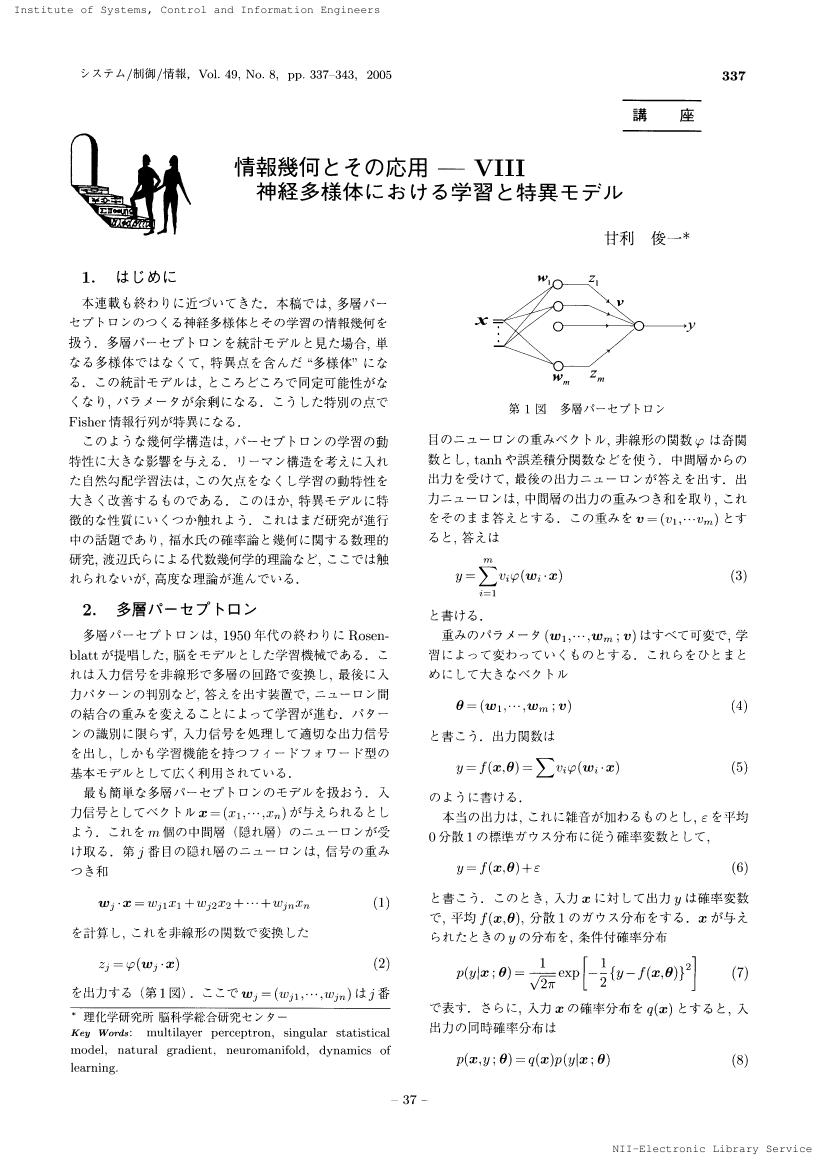

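[Open access] Information Geometry and Its Applications VIII: Learning in Neuromanifolds and Singular Models (情報幾何とその応用 : VIII神経多様体における学習と特異モデル)

- Authors

- Shun-ichi Amari

- Publisher

- The Institute of Systems, Control and Information Engineers

- Journal

- Systems, Control and Information (システム/制御/情報) (ISSN:09161600)

- Volume, issue, pages, publication date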

- vol.49, no.8, pp.337-343, 2005-08-15 (Released:2017-04-15)

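[Open access] Deep Learning, Cognition, and Understanding (深層学習と認知・理解)

- Authors

- Shun-ichi Amari

- Publisher

- Japanese Cognitive Science Society

- Journal

- Cognitive Studies (認知科学) (ISSN:13417924)

- Volume, issue, pages, publication date

- vol.29, no.1, pp.5-13, 2022-03-01 (Released:2022-03-15)

- Number of references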

- 1

Deep learning makes it possible to recognize patterns, play games, process and translate sentences, and perform other tasks by learning from examples. For some specific problems, it outperforms humans. This naturally raises a fundamental question: how does information processing in deep learning differ from that in humans? To answer this question, we briefly recapitulate the history of AI and deep learning. We then show that deep learning generates very high-dimensional empirical formulae for interpolation and extrapolation. Humans do something similar, but after finding such empirical formulae, they search for the reasons why the formulae work well. Humans search for the fundamental principles underlying phenomena in the environment, whereas deep learning does not. Humans perceive and understand the world they live in with consciousness. Furthermore, humans have a mind. Humans acquired mind and consciousness over a long evolutionary history, which deep learning lacks. What role do mind and consciousness play in cognition and understanding? The human brain, like the brains of other animals, has an excellent ability to predict, which is fundamental for surviving in a severe environment. In addition, humans have developed the ability of postdiction. Postdiction integrates various pieces of evidence to review an action plan based on a prediction before the plan is executed. This is an important function of consciousness, and deep learning does not have it.

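[Open access] Self-Organization of Neural Networks and Pattern Dynamics of Neural Fields (神経回路網の自己組織と神経場のパターン力学)

- Authors

- Shun-ichi Amari

- Publisher

- The Biophysical Society of Japan

- Journal

- Seibutsu Butsuri (生物物理) (ISSN:05824052)

- Volume, issue, pages, publication date

- vol.21, no.4, pp.210-218, 1981-07-25 (Released:2009-05-25)

- Number of references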

- 5

The neural system is believed to have a capacity for self-organization that allows it to modify its structure or behavior in adaptation to the information structure of the environment. We have constructed a mathematical theory of self-organizing nerve nets, with the aim of elucidating the modes and capabilities of this peculiar form of information processing in nerve nets. We first present a unified theoretical framework for analyzing learning and self-organization in a system of neurons with modifiable synapses that receive signals from a stationary information source. We consider the dynamics of self-organization, which describes how the synaptic weights are modified, together with the dynamics of neural excitation patterns. It is proved that a neural system can automatically form, by self-organization, detectors or processors for every signal included in the information source of the environment. A model of self-organization in nerve fields is then presented, and the dynamics of pattern formation in nerve fields is analyzed. The theory is applied to the formation of topographic maps between two nerve fields. It is shown that under certain conditions, columnar microstructures are formed in nerve fields by self-organization.
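As one illustration of pattern dynamics in a neural field, the sketch below checks the stationary-bump condition from Amari's well-known analysis of a one-dimensional field. The field obeys τ ∂u/∂t = −u + ∫ w(x − y) H(u(y)) dy + h, where H is a Heaviside output. In this model, a localized excitation of width a exists when W(a) + h = 0, with W(a) = ∫₀ᵃ w(x) dx, and it is stable when w(a) < 0. The Mexican-hat kernel, its parameters, and the resting level h = −0.3 are illustrative assumptions. The 1981 article's own models of self-organization in nerve fields may differ.

```python
import math

# Illustrative Mexican-hat kernel: narrow excitation minus broader inhibition.
A, SIGMA_E = 2.0, 1.0   # excitatory amplitude and width (assumed values)
B, SIGMA_I = 1.0, 2.0   # inhibitory amplitude and width (assumed values)

def w(x):
    """Connection weight between two field positions a distance x apart."""
    return A * math.exp(-x**2 / (2 * SIGMA_E**2)) - B * math.exp(-x**2 / (2 * SIGMA_I**2))

def W(x):
    """W(x) = integral of w from 0 to x, in closed form via the error function."""
    c = math.sqrt(math.pi / 2)
    return (A * SIGMA_E * c * math.erf(x / (SIGMA_E * math.sqrt(2)))
            - B * SIGMA_I * c * math.erf(x / (SIGMA_I * math.sqrt(2))))

def bump_width(h, lo, hi, tol=1e-12):
    """Bisection for a in [lo, hi] with W(a) + h = 0; W(a) + h must change sign on [lo, hi]."""
    f_lo = W(lo) + h
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = W(mid) + h
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid      # root lies in [mid, hi]
        else:
            hi = mid                   # root lies in [lo, mid]
    return 0.5 * (lo + hi)

h = -0.3                               # assumed resting level (must be negative)
# w changes sign, and W peaks, at x_star, where the two Gaussian terms are equal.
x_star = math.sqrt(2 * math.log(A / B) / (1 / SIGMA_E**2 - 1 / SIGMA_I**2))
a_small = bump_width(h, 0.0, x_star)   # here w(a) > 0: unstable bump
a_large = bump_width(h, x_star, 10.0)  # here w(a) < 0: stable bump

print(f"unstable width {a_small:.4f}, w(a) = {w(a_small):+.4f}")
print(f"stable width   {a_large:.4f}, w(a) = {w(a_large):+.4f}")
```

With these assumed values, two bump widths satisfy W(a) = −h. Only the wider one, where the kernel is already inhibitory, is stable. Raising h toward zero shrinks the range of widths that solve the equation.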

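[Open access] Information Geometry and Its Applications II: Convex Analysis and Dually Flat Spaces (情報幾何とその応用-II : 凸解析と双対平坦空間)

- Authors

- Shun-ichi Amari

- Publisher

- The Institute of Systems, Control and Information Engineers

- Journal

- Systems, Control and Information (システム/制御/情報) (ISSN:09161600)

- Volume, issue, pages, publication date

- vol.48, no.8, pp.340-347, 2004-08-15 (Released:2017-04-15)

- Number of references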

- 2

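[Open access] "Artificial Intelligence and Brain Science: How Close Can They Come to Humans?" (「人工知能と脳科学:人間にどこまで迫れるか」)

- Authors

- Shun-ichi Amari

- Publisher

- The Japanese Society for Artificial Intelligence

- Journal

- The 32nd Annual Conference of the Japanese Society for Artificial Intelligence, 2018 (2018年度人工知能学会全国大会(第32回))

- Volume, issue, pages, publication date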

- 2018-04-12

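[Open access] Artificial Intelligence (人工知能)

- Authors

- Jin-ichi Nagumo, Shun-ichi Amari, Kaoru Nakano

- Publisher

- The Society of Instrument and Control Engineers

- Journal

- Journal of the Society of Instrument and Control Engineers (計測と制御) (ISSN:04534662)

- Volume, issue, pages, publication date

- vol.11, no.1, pp.58-68, 1972 (Released:2009-11-26)

- Number of references

- 76

- Times cited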

- 1