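On "Raireki" (Personal History): Consciousness, Body, and Emergence

- Authors

- 下條 信輔

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.39, no.1, pp.50-55, 2021 (Released:2021-01-25)

- Number of references

- 18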

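Behavior Control of an Autonomous Mobile Robot in Dynamic Environments

- Authors

- 浅香 俊一 石川 繁樹

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.12, no.4, pp.77-83, 1994-05-15

- Number of citations

- 5 14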

We report on an autonomous mobile robot (AMR) developed for dynamically changing environments. The AMR's behavior controller is based on a state-transition scheme, which is well suited to controlling the AMR's behavior according to sensory information. The state-transition network, however, becomes very large as behaviors become more complicated. To keep the complexity of any single network from growing, we divide the network among multiple tasks. The tasks consist of a supervisor task and functional tasks. The supervisor task monitors overall status and events and controls the functional tasks. Each functional task controls a specified part of the AMR's behavior. We built a real AMR system that applies this behavior control method and demonstrated it in a showroom as a greeter robot.
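The split between a supervisor task and functional tasks described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the task names (`move`, `greet`), the event names, and the rule that an obstacle suspends greeting are all hypothetical, chosen only to show how several small state-transition networks can replace one large one.

```python
from dataclasses import dataclass, field


@dataclass
class FunctionalTask:
    """A small state-transition network for one part of the robot's behavior."""
    name: str
    state: str
    # Maps (current state, event) to the next state.
    transitions: dict = field(default_factory=dict)
    enabled: bool = True

    def handle(self, event: str) -> str:
        # A disabled task ignores events; unknown events leave the state unchanged.
        if self.enabled:
            self.state = self.transitions.get((self.state, event), self.state)
        return self.state


class Supervisor:
    """Watches events and enables or disables functional tasks."""

    def __init__(self, tasks):
        self.tasks = {task.name: task for task in tasks}

    def dispatch(self, event: str) -> dict:
        # Hypothetical policy: an obstacle suspends greeting until the path is clear.
        if event == "obstacle_detected":
            self.tasks["greet"].enabled = False
        elif event == "path_clear":
            self.tasks["greet"].enabled = True
        # Forward the event to every functional task and report their states.
        return {name: task.handle(event) for name, task in self.tasks.items()}


def build_demo() -> Supervisor:
    """Build two hypothetical functional tasks under one supervisor."""
    move = FunctionalTask("move", "cruising", {
        ("cruising", "obstacle_detected"): "avoiding",
        ("avoiding", "path_clear"): "cruising",
    })
    greet = FunctionalTask("greet", "idle", {
        ("idle", "visitor_detected"): "greeting",
        ("greeting", "visitor_left"): "idle",
    })
    return Supervisor([move, greet])
```

Each functional task holds only the transitions for its own part of the behavior, so adding a new behavior means adding a new small table rather than enlarging a single network.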

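[OA] Motion Learning in Diverse Environments Using Explicit and Implicit Dynamics Variables

- Authors

- 室岡 貴之 濱屋 政志 フェリックス フォン ドリガルスキ 田中 一敏 井尻 善久

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.39, no.2, pp.177-180, 2021 (Released:2021-03-24)

- Number of references

- 10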

The recent growth of robotic manipulation has led to the realization of increasingly complicated tasks, and various learning-based approaches to planning or control have been proposed. However, learning-based approaches that can be applied to multiple environments remain an active research topic. In this study, we aim to realize tasks in a wide range of environments by extending conventional learning-based approaches with parameters that describe various dynamics explicitly and implicitly. We applied the proposed method to two state-of-the-art learning-based approaches, deep reinforcement learning and deep model predictive control, and realized two types of non-prehensile manipulation tasks, a cart pole and object pushing, whose dynamics are difficult to model.

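[OA] On the Special Issue "The Cutting Edge of Structural Materials Technology: An Introduction to Advanced Materials for Roboticists"

- Authors

- 荒井 裕彦

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.13, no.2, pp.161, 1995-03-15 (Released:2010-08-25)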

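[OA] A Study of a Fall Survey Method Using Interview Data from People Who Fell and Sustained Fractures

- Authors

- 内山 瑛美子 高野 渉 中村 仁彦 今枝 秀二郞 孫 輔卿 松原 全宏 飯島 勝矢

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.39, no.2, pp.189-192, 2021 (Released:2021-03-24)

- Number of references

- 5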

Compared with measurements using many sensors, an interview survey is one option for investigating how people fall while placing only a light load on participants. In this paper, we aimed to predict fall patterns from interview text data. We used the k-means clustering method to confirm the validity of the labels attached to the interview data, and we confirmed the validity of the summaries written by the interviewing researchers through co-occurrence word analysis. After confirming the validity of the labels and summaries, we constructed naive Bayes classifiers to classify the fall patterns. The average classification rate was 61.1% for three types of falls: falls caused by an unexpected external force, by losing balance or support, and by other reasons.
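As an illustration of the classification step mentioned in this abstract, the following is a minimal sketch of a multinomial naive Bayes text classifier with Laplace smoothing. It is not the authors' model: the class name, the smoothing constant, the label strings, and the toy English tokens are assumptions made for the example, and real interview text would first need tokenization, which is not shown here.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes over pre-tokenized documents (illustrative only)."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha                      # Laplace smoothing constant (assumed)
        self.class_counts = Counter()           # number of documents per label
        self.word_counts = defaultdict(Counter) # token counts per label
        self.vocabulary = set()

    def fit(self, documents, labels):
        for tokens, label in zip(documents, labels):
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocabulary.update(tokens)
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            total_words = sum(self.word_counts[label].values())
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(doc_count / total_docs)
            for token in tokens:
                if token not in self.vocabulary:
                    continue  # tokens never seen in training carry no evidence
                count = self.word_counts[label][token]
                score += math.log(
                    (count + self.alpha) / (total_words + self.alpha * vocab_size)
                )
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy data, not taken from the study.
toy_documents = [
    ["pushed", "crowd", "fell"],
    ["bumped", "bicycle", "fell"],
    ["slipped", "stairs", "balance"],
    ["tripped", "step", "balance"],
]
toy_labels = ["external_force", "external_force", "lost_balance", "lost_balance"]
classifier = NaiveBayesTextClassifier().fit(toy_documents, toy_labels)
```

Calling `classifier.predict(["slipped", "balance"])` then returns the label whose training documents share the most evidence with the query tokens.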

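[OA] Structure and Design of Musculoskeletal Humanoids Inspired by the Human Body

- Authors

- 水内 郁夫

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.28, no.6, pp.689-694, 2010 (Released:2012-01-25)

- Number of references

- 51

- Number of citations

- 2 3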

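[OA] Walking Control of Humanoid Robots

- Authors

- 山本 江

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.36, no.2, pp.103-109, 2018 (Released:2018-04-15)

- Number of references

- 38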

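[OA] Behavior Learning and Chaos

- Authors

- 三上 貞芳

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.15, no.8, pp.1118-1121, 1997-11-15 (Released:2010-08-25)

- Number of references

- 21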

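[OA] Development of an Expressive Face Robot and Realization of a Reception System

- Authors

- 小林 宏

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.24, no.6, pp.708-711, 2006-09-15 (Released:2010-08-25)

- Number of references

- 4

- Number of citations

- 6 2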

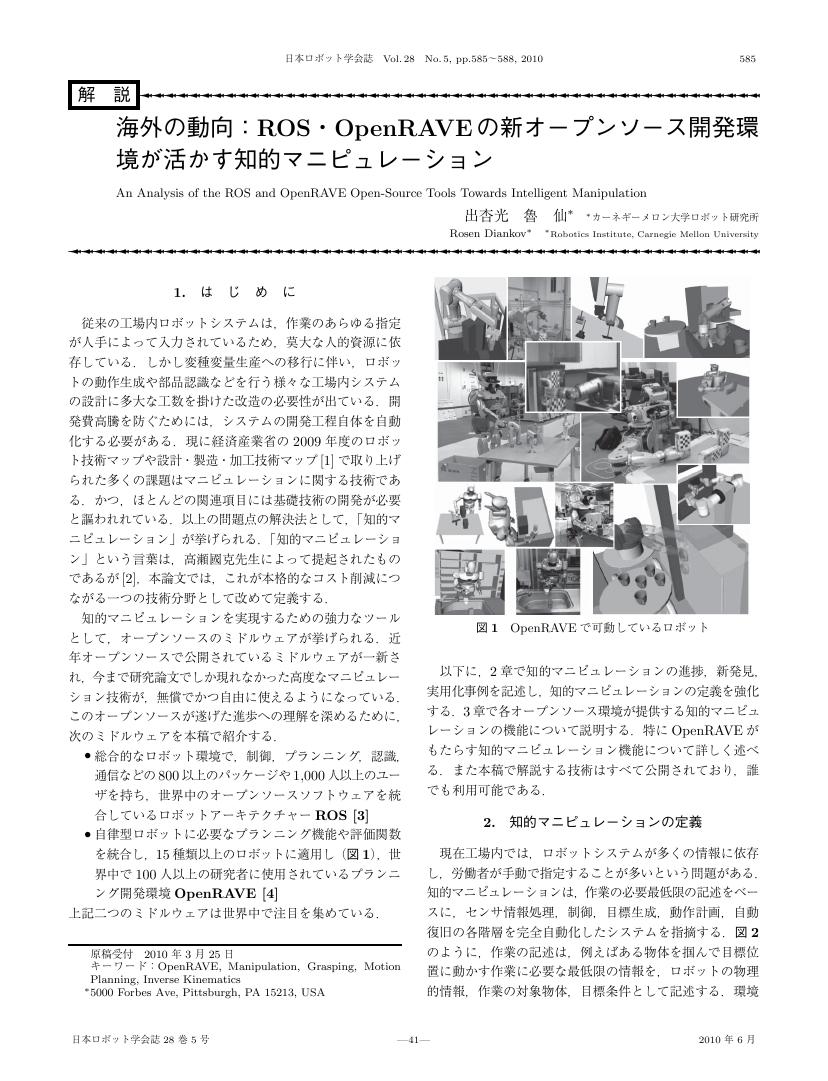

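- Authors

- 出杏光 魯仙

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.28, no.5, pp.585-588, 2010 (Released:2012-01-25)

- Number of references

- 8

- Number of citations

- 2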

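[OA] Robot Imitation Learning Using Motor Learning Primitives

- Authors

- 中西 淳 Auke Jan Ijspeert Stefan Schaal Gordon Cheng

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.22, no.2, pp.165-170, 2004-03-15 (Released:2010-08-25)

- Number of references

- 25

- Number of citations

- 5 4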

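[OA] Current State and Research Trends of Hardware Technologies Supporting Neuromorphic Computing

- Authors

- 岡崎 篤也

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.35, no.3, pp.209-214, 2017 (Released:2017-05-15)

- Number of references

- 21

- Number of citations

- 1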

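- Authors

- 加美 聡哲 原田 勇希 山口 智香 岡田 佳都 大野 和則 田所 諭

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.37, no.8, pp.735-743, 2019 (Released:2019-10-18)

- Number of references

- 19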

In this study, we developed an AED gripping system for an AED transport UAV so that AEDs can be delivered quickly to remote places. The system consists of grippers and a gripping determination system. We adopted permanent electromagnets as the grippers. The gripping determination system is needed to confirm that the AED has been delivered to the destination, because the AED transport UAV flies to remote places where the pilot cannot see it. We developed a new gripping determination system that uses the difference in the convergence time of the counter-electromotive force between the gripping and non-gripping states. We conducted flight experiments and confirmed that the AED gripping system does not drop the AED accidentally and can confirm the gripping status during flight.
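The idea of deciding the gripping state from a convergence time can be sketched as follows. This is only an illustration under stated assumptions, not the authors' method: the abstract does not say how convergence is measured or which state converges more slowly, so the tolerance band, the threshold time, the `longer_means_gripping` flag, and the synthetic exponential traces are all hypothetical.

```python
import math


def convergence_time(samples, dt, tolerance):
    """Return the time after which |sample| stays within `tolerance` of zero."""
    last_outside = -1
    for index, value in enumerate(samples):
        if abs(value) > tolerance:
            last_outside = index
    # The signal is considered converged from the sample after the last excursion.
    return (last_outside + 1) * dt


def is_gripping(samples, dt, tolerance, threshold_time, longer_means_gripping=True):
    """Classify the gripping state by comparing convergence time with a threshold.

    `longer_means_gripping` is an assumption; flip it if the real hardware
    converges faster while gripping.
    """
    elapsed = convergence_time(samples, dt, tolerance)
    return (elapsed > threshold_time) == longer_means_gripping


def synthetic_decay(time_constant, dt, count):
    """Generate a hypothetical exponentially decaying voltage trace."""
    return [math.exp(-index * dt / time_constant) for index in range(count)]


# Hypothetical traces: a slower decay stands in for the gripping state.
gripped_trace = synthetic_decay(time_constant=0.010, dt=0.001, count=100)
released_trace = synthetic_decay(time_constant=0.004, dt=0.001, count=100)
```

With these synthetic traces, `is_gripping(gripped_trace, 0.001, 0.05, 0.02)` and `is_gripping(released_trace, 0.001, 0.05, 0.02)` give different answers, which is the property a real threshold would have to be calibrated for on measured data.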

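[OA] Control of Robots with Redundancy

- Authors

- 吉川 恒夫

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.2, no.6, pp.587-592, 1984-12-30 (Released:2010-08-25)

- Number of references

- 23

- Number of citations

- 2 4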

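- Authors

- 中臺 一博 日台 健一 溝口 博 奥乃 博 北野 宏明

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.21, no.5, pp.517-525, 2003-07-15

- Number of references

- 11

- Number of citations

- 6 3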

This paper describes a real-time human tracking system based on audio-visual integration for the humanoid SIG. The essential idea for real-time, robust tracking is the hierarchical integration of multi-modal information. The system creates three kinds of streams: auditory, visual, and associated streams. An auditory stream with a sound source direction is formed as a temporal series of events from the audition module, which localizes multiple sound sources and cancels motor noise using a pair of microphones. A visual stream with a face ID and its 3D position is formed as a temporal series of events from the vision module, which combines face detection, face identification, and face localization by stereo vision. Auditory and visual streams are associated into an associated stream, a higher-level representation, according to their proximity. Because the associated stream disambiguates partially missing information in the auditory or visual streams, the “focus-of-attention” control of SIG works well enough for robust human tracking. These processes run in real time with a delay of 200 msec on off-the-shelf PCs distributed via TCP/IP. As a result, robust human tracking is attained even when the person is visually occluded and simultaneous speech occurs.
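The association of auditory and visual streams "according to their proximity" can be illustrated with a small sketch. It is not the SIG implementation: the event classes, the coordinate convention (x forward, y to the left), the 10-degree threshold, and the greedy nearest-match rule are assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class AuditoryEvent:
    azimuth_deg: float  # direction of the localized sound source


@dataclass
class VisualEvent:
    face_id: str
    x: float  # forward distance in metres (assumed convention)
    y: float  # leftward distance in metres (assumed convention)
    z: float  # height in metres, unused for the azimuth

    @property
    def azimuth_deg(self) -> float:
        # Direction of the face as seen from the robot, in degrees.
        return math.degrees(math.atan2(self.y, self.x))


def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def associate(auditory_events, visual_events, max_diff_deg=10.0):
    """Greedily pair each auditory event with the nearest unused visual event.

    Returns a list of (auditory, visual_or_None) tuples; None means the sound
    had no face within `max_diff_deg` and stays a purely auditory stream.
    """
    pairs = []
    used = set()
    for sound in auditory_events:
        best = None  # (difference, index) of the closest candidate so far
        for index, face in enumerate(visual_events):
            if index in used:
                continue
            diff = angular_difference(sound.azimuth_deg, face.azimuth_deg)
            if diff <= max_diff_deg and (best is None or diff < best[0]):
                best = (diff, index)
        if best is None:
            pairs.append((sound, None))
        else:
            used.add(best[1])
            pairs.append((sound, visual_events[best[1]]))
    return pairs
```

An unmatched sound stays in the result with `None`, which mirrors the point in the abstract that one modality can keep a track alive while the other is missing, for example when a speaker is visually occluded.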

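[OA] The Dog-Type Robot AIBO and the New Robot Industry

- Authors

- 土井 利忠

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.30, no.10, pp.1000-1001, 2012 (Released:2013-01-25)

- Number of references

- 3

- Number of citations

- 2 2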

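[OA] Technical Challenges of Rescue Robots

- Authors

- 田所 諭

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.28, no.2, pp.134-137, 2010 (Released:2012-01-25)

- Number of references

- 2

- Number of citations

- 3 14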

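[OA] Development of the Asteroid Exploration Rover "MINERVA-II"

- Authors

- 吉光 徹雄 久保田 孝

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.38, no.1, pp.54-55, 2020 (Released:2020-01-16)

- Number of citations

- 1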

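[OA] The 21st Century Is the Century of Robots

- Authors

- 井口 雅一

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.4, no.6, pp.640, 1986-12-15 (Released:2010-08-25)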

[OA] The Cog Project

- Authors

- Rodney A. Brooks

- Publisher

- The Robotics Society of Japan

- Journal

- Journal of the Robotics Society of Japan (ISSN:02891824)

- Volume, issue, pages, and publication date

- vol.15, no.7, pp.968-970, 1997-10-15 (Released:2010-08-25)

- Number of references

- 9

- Number of citations

- 10 23

We are building a humanoid robot at the MIT Artificial Intelligence Laboratory. It is a legless, human-sized robot meant to emulate human functionality. We are particularly interested in using it as a vehicle for understanding how humans work, by trying to combine many theories from artificial intelligence, cognitive science, physiology, and neuroscience. While undergoing continual revisions to its hardware and software, the robot has been operational in one form or another for over three years. We are working on systems that emulate both human development and human social interactions.