OA The Future of Space Robots (宇宙ロボットの未来像)

- Author: 中谷 一郎
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.27, no.5, pp.518-522, 2009 (Released:2011-11-15)
- Number of references: 8

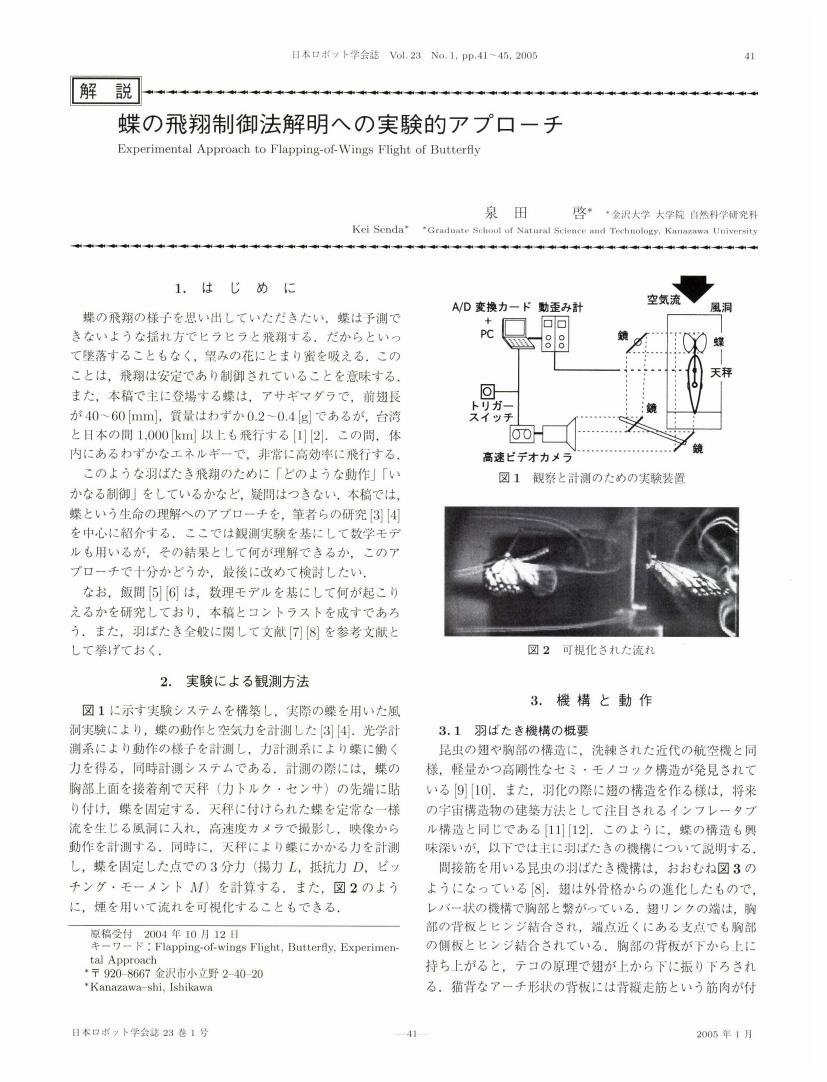

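OA An Experimental Approach to Understanding Flight Control in Butterflies (蝶の飛翔制御法解明への実験的アプローチ)

- Author: 泉田 啓
- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.23, no.1, pp.41-45, 2005-01-15 (Released:2010-08-25)
- Number of references: 20
- Number of citing works: 3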

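OA Realizing Compliant, Highly Mobile Multi-Legged Robots with Hydraulics (油圧による柔軟で機動性の高い多脚ロボットの実現)

- Author: 玄 相昊
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.37, no.2, pp.150-155, 2019 (Released:2019-03-20)
- Number of references: 22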

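OA New Robotics Opened Up by Next-Generation Actuators (次世代アクチュエータが切り拓く新しいロボティクス)

- Author: 鈴森 康一
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.33, no.9, pp.656-659, 2015 (Released:2015-12-15)
- Number of references: 14
- Number of citing works: 3 5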

OA The Philosophy of French Vacations (フレンチバカンスの哲学)

- Author: 林部 充宏
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.31, no.10, pp.981-982, 2013 (Released:2014-01-15)
- Number of references: 1

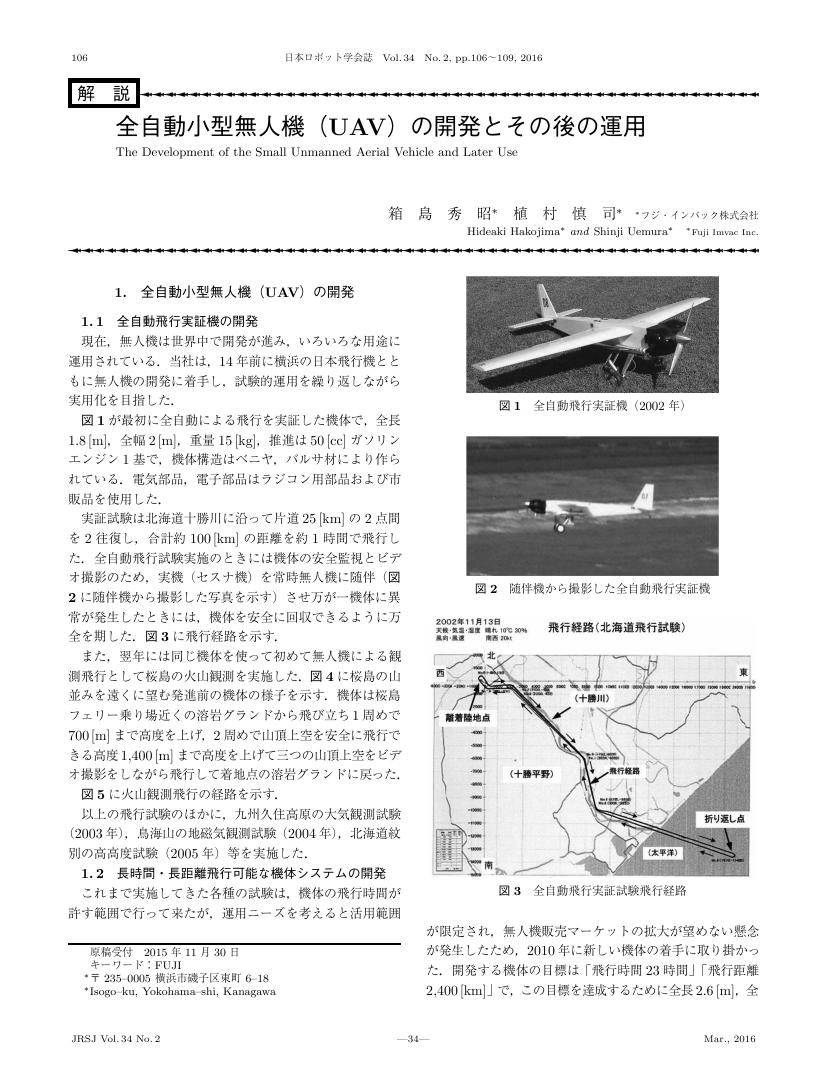

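OA Development and Subsequent Operation of a Fully Automatic Small Unmanned Aerial Vehicle (UAV) (全自動小型無人機(UAV)の開発とその後の運用)

- Authors: 箱島 秀昭, 植村 慎司
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.34, no.2, pp.106-109, 2016 (Released:2016-04-15)
- Number of citing works: 2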

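OA Applications of Affective Interfaces to Entertainment and Communication (情動インタフェースのエンタテインメントとコミュニケーションへの応用)

- Author: 福嶋 政期
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.32, no.8, pp.692-695, 2014 (Released:2014-11-15)
- Number of references: 21
- Number of citing works: 3 4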

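OA Propulsion Control and Head Position/Attitude Control of a Three-Dimensional Snake Robot (三次元ヘビロボットの推進制御と先頭位置姿勢制御)

- Authors: 山北 昌毅, 橋本 実, 山田 毅
- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.22, no.1, pp.61-67, 2004-01-15 (Released:2010-08-25)
- Number of references: 6
- Number of citing works: 7 13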

A snake robot is a typical example of a robot with redundant degrees of freedom. Using input-output linearization applied only to the motion of the robot's head, we can make the head speed track a desired value, but the robot eventually reaches a singular posture such as a straight line. To overcome this problem, a control method based on dynamic manipulability was proposed. In this paper, we propose a control technique that uses a physical index, the horizontal constraint force, together with a control law for the head configuration. With these, a winding pattern that lets the robot avoid the singular posture is generated automatically, and the head converges to the target.

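OA Introduction to Affordance: Q&A for Young Robotics Researchers (アフォーダンス入門―若きロボット研究者のQ&A―)

- Author: 佐々木 正人
- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.24, no.7, pp.776-782, 2006-10-15 (Released:2010-08-25)
- Number of citing works: 3 2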

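OA Fundamental Theory of Grasping and Manipulation (把持と操りの基礎理論)

- Author: 吉川 恒夫
- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.13, no.7, pp.950-957, 1995-10-15 (Released:2010-08-10)
- Number of references: 19
- Number of citing works: 3 9 2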

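OA Rehabilitation Robots and Computational Neurorehabilitation (リハビリテーションロボットと計算論的ニューロリハビリテーション)

- Author: 井澤 淳
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.35, no.7, pp.518-524, 2017 (Released:2017-10-01)
- Number of references: 48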

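IoT Enablement of Autonomous Mobile Robots and the Creation of New Markets (自律移動ロボットのIoT化と新規市場創出)

- Authors: 安藤 健, 上松 弘幸
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.37, no.8, pp.703-706, 2019 (Released:2019-10-18)
- Number of references: 9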

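OA History and Future Prospects of Unmanned Aircraft in Japan (我が国の無人航空機の歴史と今後の展望)

- Author: 細田 慶信
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.34, no.2, pp.81-85, 2016 (Released:2016-04-15)
- Number of references: 10
- Number of citing works: 1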

Directory of Robot Gentlemen and Ladies (ロボット紳士・淑女録)

- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.14, no.3, pp.337-369, 1996

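OA Trends in Prosthetic Foot Development (義足足部開発の動向)

- Authors: 遠藤 謙, 菅原 祥平, 北野 智士
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.32, no.10, pp.855-858, 2014 (Released:2015-01-15)
- Number of references: 22
- Number of citing works: 1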

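OA Emergence of Embodiment-Based Dynamic Communication Between Two Robots Equipped with RNNs (RNNを備えた2体のロボット間における身体性に基づいた動的コミュニケーションの創発)

- Authors: 日下 航, 尾形 哲也, 小嶋 秀樹, 高橋 徹, 奥乃 博
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.28, no.4, pp.532-543, 2010 (Released:2012-01-25)
- Number of references: 29
- Number of citing works: 1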

We propose a model of evolutionary communication using voice signs and motion signs between two robots. In our model, a robot recognizes the other's action by mapping it onto its own body dynamics with a Multiple Timescale Recurrent Neural Network (MTRNN). The robot then interprets the action as a sign using its own hierarchical neural network (NN). Each robot modifies its interpretation of signs by re-training the NN to adapt to the other's interpretation throughout their interaction. In the experiment, we found that communication kept evolving through alternating miscommunication and re-adaptation, and that this induced the emergence of diverse new signs that depend on the robots' body dynamics through the generalization capability of the MTRNN.

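OA Inverted-Pendulum Vehicles (2): The One-Ball Vehicle (倒立振子型乗り物 (2) 一球車)

- Author: 鶴賀 孝廣
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.8, no.3, pp.365, 1990-06-15 (Released:2010-08-25)
- Number of citing works: 1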

- Authors: 細田 耕, 浅田 稔
- Publisher: The Robotics Society of Japan
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.14, no.2, pp.313-319, 1996-03-15 (Released:2010-08-10)
- Number of references: 12
- Number of citing works: 3 4

This paper describes an adaptive visual servoing controller consisting of an on-line estimator and a feedback/feedforward controller for uncalibrated camera-manipulator systems. The estimator needs no a priori knowledge of the kinematic structure or the parameters of the camera-manipulator system, such as camera and link parameters. The controller consists of feedforward and feedback terms that use the estimated results to make the image features converge to the desired trajectories. Experimental results demonstrate the validity of the proposed estimator and controller.
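
The idea of servoing on image features while estimating the unknown camera-manipulator relationship on-line can be illustrated with a small sketch. Everything here is an assumption made for illustration: the abstract does not state the estimator's form, so a Broyden-style rank-one update stands in for it, the "true" plant is a made-up 2x2 linear map, only a feedback term toward a fixed target is shown (no feedforward term, no trajectory), and all function names are hypothetical.

```python
# Sketch: uncalibrated visual servoing with an on-line estimated image Jacobian.
# The controller never reads the plant coefficients; it only observes features.

def true_features(q):
    # Hypothetical unknown plant: image features as a linear function of joints.
    return [1.5 * q[0] + 0.4 * q[1] + 0.2,
            -0.3 * q[0] + 0.9 * q[1] - 0.1]

def solve2(J, e):
    # Solve J x = e for a 2x2 matrix J using Cramer's rule.
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    return [(e[0] * J[1][1] - J[0][1] * e[1]) / det,
            (J[0][0] * e[1] - e[0] * J[1][0]) / det]

def broyden_update(J, dq, dy):
    # Rank-one update: J <- J + (dy - J dq) dq^T / (dq^T dq).
    norm2 = dq[0] ** 2 + dq[1] ** 2
    if norm2 < 1e-12:
        return J  # step too small to carry information; keep the estimate
    r = [dy[i] - (J[i][0] * dq[0] + J[i][1] * dq[1]) for i in range(2)]
    return [[J[i][j] + r[i] * dq[j] / norm2 for j in range(2)] for i in range(2)]

def servo(y_target, steps=50, gain=0.5):
    q = [0.0, 0.0]
    J = [[1.0, 0.0], [0.0, 1.0]]  # crude initial guess, no calibration
    y = true_features(q)
    for _ in range(steps):
        err = [y_target[0] - y[0], y_target[1] - y[1]]
        step = solve2(J, err)               # feedback term using the estimate
        dq = [gain * step[0], gain * step[1]]
        q = [q[0] + dq[0], q[1] + dq[1]]
        y_new = true_features(q)
        dy = [y_new[0] - y[0], y_new[1] - y[1]]
        J = broyden_update(J, dq, dy)       # refine the estimate from observed motion
        y = y_new
    return y, J

final_y, J_est = servo([1.0, 0.5])
```

In this toy setting the features approach the target even though the controller starts from an identity guess for the Jacobian; a real system would be nonlinear and would need the trajectory-tracking terms the abstract mentions.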

OA Telerobotics in Underwater Robots (水中ロボットにおけるテレロボティクス)

- Authors: 長谷川 昂宏, 山内 悠嗣, 山下 隆義, 藤吉 弘亘, 秋月 秀一, 橋本 学, 堂前 幸康, 川西 亮輔
- Publisher: 一般社団法人 日本ロボット学会
- Journal: 日本ロボット学会誌 (ISSN:02891824)
- Volume, issue, pages, publication date: vol.36, no.5, pp.349-359, 2018 (Released:2018-07-15)
- Number of references: 27

Automating the picking and placing of a variety of objects stored on shelves is a challenging problem for robotic picking systems in distribution warehouses. Object recognition using image processing is especially effective for this task. In this study, we propose an efficient method of object recognition based on the object grasping position for picking robots. We use a convolutional neural network (CNN), which can achieve highly accurate object recognition. In typical CNN methods, objects are recognized from an image containing the picking targets, and object regions suitable for grasping are then detected. However, these methods increase the computational cost because a large number of weight filters are convolved with the whole image. The proposed method first detects all graspable positions in an image. It then selects an optimal grasping position by feeding an image of the local region around each grasping point to the CNN. Because the grasping positions are recognized first, the CNN performs fewer convolutions and the computational cost is reduced. Experimental results confirmed that the method achieves highly accurate object recognition while decreasing the computational cost.
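
The cost argument in this abstract can be made concrete with a rough count of multiply-accumulate operations for one convolution layer. The image size, patch size, number of candidate grasp positions, and layer shape below are all hypothetical numbers chosen for illustration, not values from the paper, and the cost of the first-stage grasp-position detector is left out.

```python
def conv_macs(height, width, kernel, in_ch, out_ch):
    # Multiply-accumulates for one stride-1, same-padded convolution layer.
    return height * width * kernel * kernel * in_ch * out_ch

# Assumed setup: a 640x480 RGB image, one 3x3 layer with 32 output channels.
whole_image = conv_macs(480, 640, 3, 3, 32)

# Assumed alternative: 10 candidate grasp positions, each a 64x64 local patch.
patches = 10 * conv_macs(64, 64, 3, 3, 32)

ratio = whole_image / patches
print(whole_image, patches, ratio)
```

Under these assumptions the patch-based pass needs several times fewer operations for this layer; the actual saving depends on how many candidates the first stage returns and how large the local regions are.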